MBNMAdose for dose-response Model-Based Network Meta-Analysis

Hugo Pedder

2023-06-01

mbnmadose.RmdIntroduction

This vignette demonstrates how to use MBNMAdose to

perform Model-Based Network Meta-Analysis (MBNMA) of studies with

multiple doses of different agents by accounting for the dose-response

relationship. This can connect disconnected networks via the

dose-response relationship and the placebo response, improve precision

of estimated effects and allow interpolation/extrapolation of predicted

response based on the dose-response relationship.

Modelling the dose-response relationship also avoids the “lumping” of different doses of an agent which is often done in Network Meta-Analysis (NMA) and can introduce additional heterogeneity or inconsistency. All models and analyses are implemented in a Bayesian framework, following an extension of the standard NMA methodology presented by (Lu and Ades 2004) and are run in JAGS (version 4.3.0 or later is required) (2017). For full details of dose-response MBNMA methodology see Mawdsley et al. (2016). Throughout this vignette we refer to a treatment as a specific dose or a specific agent

This package has been developed alongside MBNMAtime, a

package that allows users to perform time-course MBNMA to incorporate

multiple time points within different studies. However, they should

not be loaded into R at the same time as there are a number of

functions with shared names that perform similar tasks yet are specific

to dealing with either time-course or dose-response data.

Workflow within the package

Functions within MBNMAdose follow a clear pattern of

use:

- Load your data into the correct format using

mbnma.network() - Analyse your data using

mbnma.run(), or any of the available wrapper dose-response functions - Test for consistency at the treatment-level using functions like

nma.nodesplit()andnma.run() - Examine model results using forest plots and treatment rankings

- Use your model to predict responses using

predict() - Calculate relative effects between any doses (even those not in the

original dataset) using

get.relative()

At each of these stages there are a number of informative plots that can be generated to help understand the data and to make decisions regarding model fitting.

Datasets Included in the Package

Triptans for migraine pain relief

triptans is from a systematic review of interventions

for pain relief in migraine (Thorlund et al.

2014). The outcome is binary, and represents (as aggregate data)

the number of participants who were headache-free at 2 hours. Data are

from patients who had had at least one migraine attack, who were not

lost to follow-up, and who did not violate the trial protocol. The

dataset includes 70 Randomised-Controlled Trials (RCTs), comparing 7

triptans with placebo. Doses are standardised as relative to a “common”

dose, and in total there are 23 different treatments (combination of

dose and agent). triptans is a data frame in long format

(one row per arm and study), with the variables studyID,

AuthorYear, N, r,

dose and agent.

| studyID | AuthorYear | n | r | dose | agent |

|---|---|---|---|---|---|

| 1 | Tfelt-Hansen P 2006 | 22 | 6 | 0 | placebo |

| 1 | Tfelt-Hansen P 2006 | 30 | 14 | 1 | sumatriptan |

| 2 | Goadsby PJ 2007 | 467 | 213 | 1 | almotriptan |

| 2 | Goadsby PJ 2007 | 472 | 229 | 1 | zolmitriptan |

| 3 | Tuchman M2006 | 160 | 15 | 0 | placebo |

| 3 | Tuchman M2006 | 174 | 48 | 1 | zolmitriptan |

Biologics for treatment of moderate-to-severe psoriasis

There are 3 psoriasis datasets from a systematic review of RCTs

comparing biologics at different doses and placebo (Warren et al. 2019). Each dataset contains a

different binary outcome, all based on the number of patients

experiencing degrees of improvement on the Psoriasis Area and Severity

Index (PASI) measured at 12 weeks follow-up. Each dataset contains

information on the number of participants who achieved \(\geq75\%\) (psoriasis75),

\(\geq90\%\)

(psoriasis90), or \(100\%\) (psoriasis100).

Selective Serotonin Reuptake Inhibitors (SSRIs) for major depression

ssri is from a systematic review examining the efficacy

of different doses of SSRI antidepressant drugs and placebo (Furukawa et al. 2019). The response to

treatment is defined as a 50% reduction in depressive symptoms after 8

weeks (4-12 week range) follow-up. The dataset includes 60 RCTs

comparing 5 different SSRIs with placebo.

kable(head(ssri), digits=2) | studyID | bias | age | weeks | agent | dose | n | r |

|---|---|---|---|---|---|---|---|

| 1 | Moderate risk | 43.0 | 6 | placebo | 0 | 149 | 69 |

| 1 | Moderate risk | 42.9 | 6 | fluoxetine | 20 | 137 | 77 |

| 2 | Low risk | 41.2 | 6 | placebo | 0 | 137 | 63 |

| 2 | Low risk | 40.9 | 6 | paroxetine | 20 | 138 | 74 |

| 7 | Low risk | 41.6 | 6 | placebo | 0 | 158 | 91 |

| 7 | Low risk | 41.3 | 6 | fluoxetine | 20 | 148 | 89 |

Interventions for Serum Uric Acid (SUA) reduction in gout

gout is from a systematic review of interventions for

lowering Serum Uric Acid (SUA) concentration in patients with gout

[not published previously]. The outcome is continuous, and

aggregate data responses correspond to the mean change from baseline in

SUA in mg/dL at 2 weeks follow-up. The dataset includes 10

Randomised-Controlled Trials (RCTs), comparing 5 different agents, and

placebo. Data for one agent (RDEA) arises from an RCT that is not

placebo-controlled, and so is not connected to the network directly. In

total there were 19 different treatments (combination of dose and

agent). gout is a data frame in long format (one row per

arm and study), with the variables studyID, y,

se, agent and dose.

| studyID | y | se | agent | dose | |

|---|---|---|---|---|---|

| 4 | 1102 | -0.53 | 0.25 | RDEA | 100 |

| 5 | 1102 | -1.37 | 0.18 | RDEA | 200 |

| 6 | 1102 | -1.73 | 0.25 | RDEA | 400 |

| 53 | 2001 | -6.82 | 0.06 | Febu | 240 |

| 54 | 2001 | 0.15 | 0.04 | Plac | 0 |

| 92 | 2003 | -3.43 | 0.03 | Allo | 300 |

Interventions for pain relief in osteoarthritis

osteopain is from a systematic review of interventions

for pain relief in osteoarthritis, used previously in Pedder et al.

(2019). The outcome is continuous, and

aggregate data responses correspond to the mean WOMAC pain score at 2

weeks follow-up. The dataset includes 18 Randomised-Controlled Trials

(RCTs), comparing 8 different agents with placebo. In total there were

26 different treatments (combination of dose and agent). The active

treatments can also be grouped into 3 different classes, within which

they have similar mechanisms of action. osteopain_2wkabs is

a data frame in long format (one row per arm and study), with the

variables studyID, agent, dose,

class, y, se, and

N.

| studyID | agent | dose | class | y | se | n | |

|---|---|---|---|---|---|---|---|

| 13 | 1 | Placebo | 0 | Placebo | 6.26 | 0.23 | 60 |

| 14 | 1 | Etoricoxib | 10 | Cox2Inhib | 5.08 | 0.16 | 114 |

| 15 | 1 | Etoricoxib | 30 | Cox2Inhib | 4.42 | 0.17 | 102 |

| 16 | 1 | Etoricoxib | 5 | Cox2Inhib | 5.34 | 0.16 | 117 |

| 17 | 1 | Etoricoxib | 60 | Cox2Inhib | 3.62 | 0.17 | 112 |

| 18 | 1 | Etoricoxib | 90 | Cox2Inhib | 4.08 | 0.17 | 112 |

Alogliptin for lowering blood glucose concentration in type II diabetes

alog_pcfb is from a systematic review of

Randomised-Controlled Trials (RCTs) comparing different doses of

alogliptin with placebo (Langford et al.

2016). The systematic review was simply performed and was

intended to provide data to illustrate a statistical methodology rather

than for clinical inference. Alogliptin is a treatment aimed at reducing

blood glucose concentration in type II diabetes. The outcome is

continuous, and aggregate data responses correspond to the mean change

in HbA1c from baseline to follow-up in studies of at least 12 weeks

follow-up. The dataset includes 14 RCTs, comparing 5 different doses of

alogliptin with placebo, leading to 6 different treatments (combination

of dose and agent) within the network. alog_pcfb is a data

frame in long format (one row per arm and study), with the variables

studyID, agent, dose,

y, se, and N.

| studyID | agent | dose | y | se | n |

|---|---|---|---|---|---|

| NCT01263470 | alogliptin | 0.00 | 0.06 | 0.05 | 75 |

| NCT01263470 | alogliptin | 6.25 | -0.51 | 0.08 | 79 |

| NCT01263470 | alogliptin | 12.50 | -0.70 | 0.06 | 84 |

| NCT01263470 | alogliptin | 25.00 | -0.76 | 0.06 | 79 |

| NCT01263470 | alogliptin | 50.00 | -0.82 | 0.05 | 79 |

| NCT00286455 | alogliptin | 0.00 | -0.13 | 0.08 | 63 |

Inspecting the data

Before embarking on an analysis, the first step is to have a look at

the raw data. Two features (network connectivity and dose-response

relationship) are particularly important for MBNMA. For this we want to

get our dataset into the right format for the package. We can do this

using mbnma.network().

# Using the triptans dataset

network <- mbnma.network(triptans)

#> Values for `agent` with dose = 0 have been recoded to `Placebo`

#> agent is being recoded to enforce sequential numbering and allow inclusion of `Placebo`

summary(network)

#> Description: Network

#> Number of studies: 70

#> Number of treatments: 23

#> Number of agents: 8

#> Median (min, max) doses per agent (incl placebo): 4 (3, 6)

#> Agent-level network is CONNECTED

#>

#> Ttreatment-level network is CONNECTED

#> This function takes a dataset with the columns:

-

studyIDStudy identifiers -

agentAgent identifiers (can be character, factor or numeric) -

doseNumeric data indicating the dose of the given agent within the study arm -

classAn optional column indicating a particular class code. Agents with the same name/identifier must also have the same class code.

Depending on the type of data (and the likelihood) the following columns are required:

- Normal likelihood

-

yNumeric data indicating the mean response for a given study arm -

seNumeric data indicating the standard error for a given study arm

-

- Binomial likelihood

-

rNumeric data indicating the number of responders in a given study arm -

nNumeric data indicating the total number of participants in a given study arm

-

- Poisson likelihood

-

rNumeric data indicating the number of events in a given study arm -

ENumeric data indicating the total exposure time in a given study arm

-

It then performs the following checks on the data:

- The dataset has the required column names

- There are no missing values

- All doses are positive

- All SE, r, N and E are positive

- Class labels are consistent within each agent

- Studies have at least two arms

- Studies do not only compare the same agent at the same dose

Finally it converts the data frame into an object of

class("mbnma.network"), which contains indices for study

arms, numeric variables for treatments, agents and classes, and stores a

vector of treatment, agent and class names as an element within the

object. By convention, agents are numbered alphabetically, though if the

original data for agents is provided as a factor then the factor codes

will be used. This then contains all the necessary information for

subsequent MBNMAdose functions.

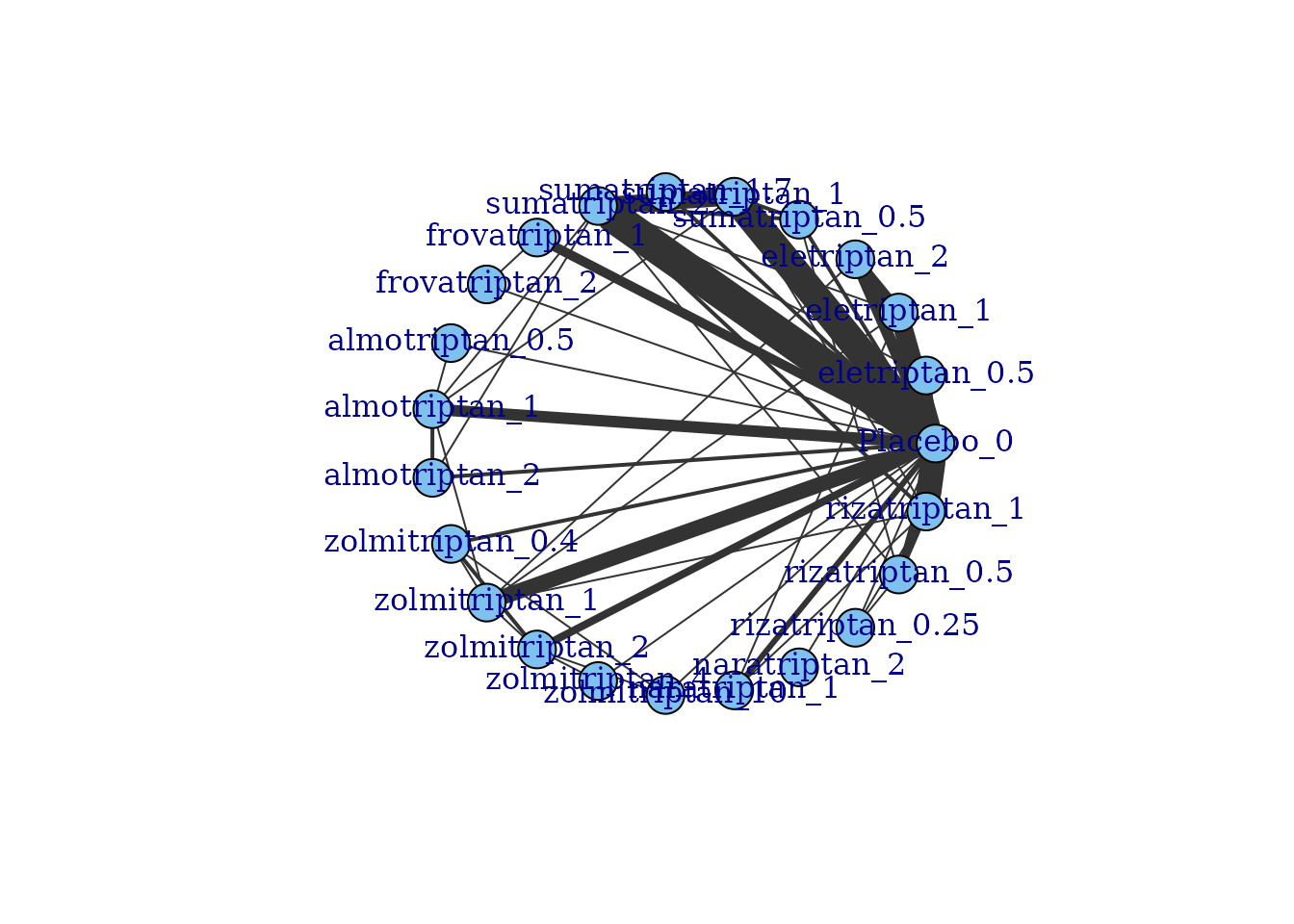

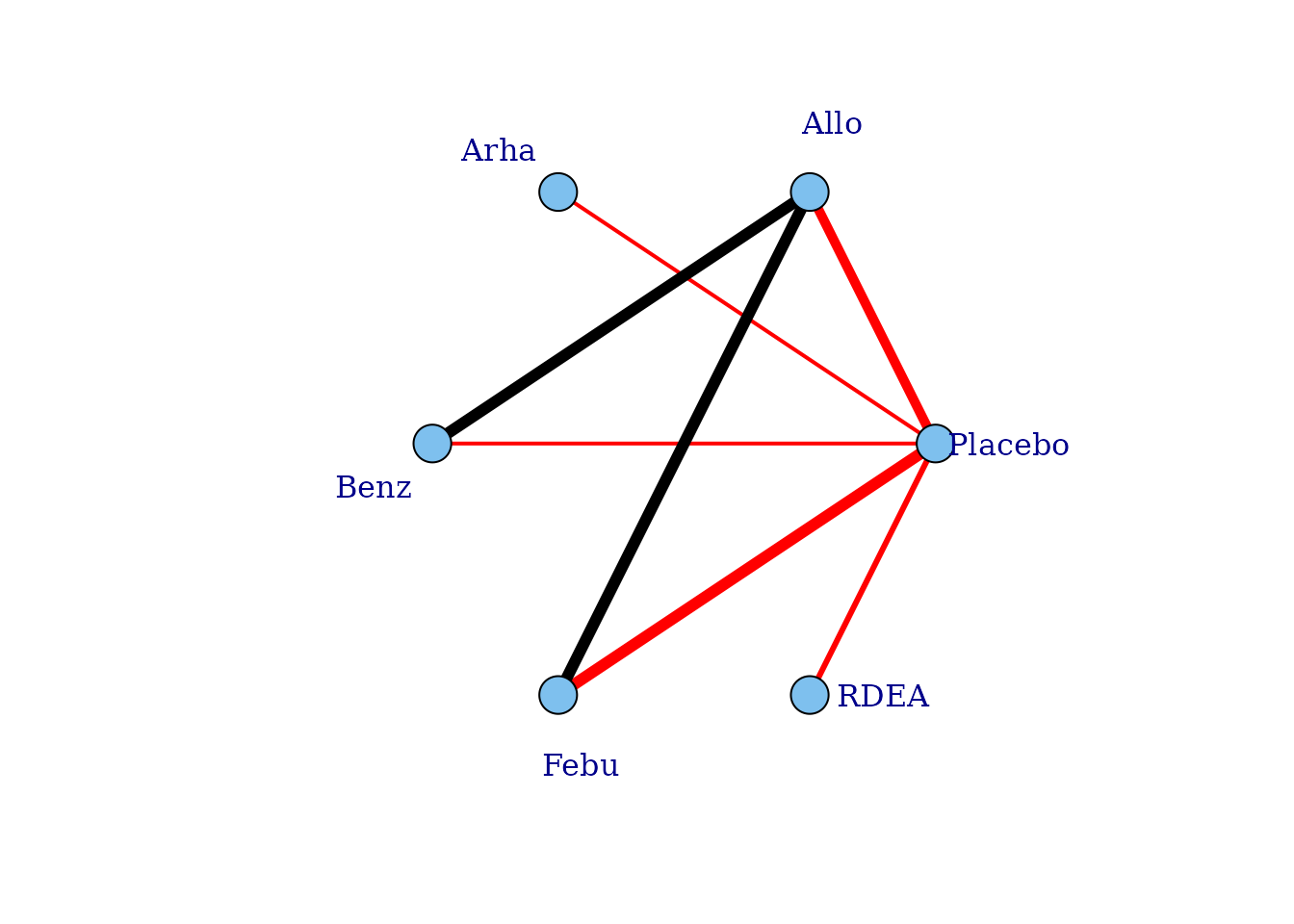

Examining network connectivity

Examining how the evidence in the network is connected and identifying which studies compare which treatments/agents helps to understand which effects can be estimated, what information will be helping to inform those estimates, and whether linking via the dose-response relationship is possible if the network is disconnected at the treatment-level. The complexity of dose-response relationships that can be estimated is dependent on the number of doses of each agent available, so this is also important to know.

Network plots can be plotted which shows which treatments/agents have

been compared in head-to-head trials. Typically the thickness of

connecting lines (“edges”) is proportional to the number of studies that

make a particular comparison and the size of treatment nodes

(“vertices”) is proportional to the total number of patients in the

network who were randomised to a given treatment/agent (provided

N is included as a variable in the original dataset for

mbnma.network()).

In MBNMAdose these plots are generated using

igraph, and can be plotted by calling plot().

The generated plots are objects of class("igraph") meaning

that, in addition to the options specified in plot(),

various igraph functions can subsequently be used to make

more detailed edits to them.

Within these network plots, vertices are automatically aligned in a circle (as the default) and can be tidied by shifting the label distance away from the nodes.

# Prepare data using the triptans dataset

tripnet <- mbnma.network(triptans)

#> Values for `agent` with dose = 0 have been recoded to `Placebo`

#> agent is being recoded to enforce sequential numbering and allow inclusion of `Placebo`

summary(tripnet)

#> Description: Network

#> Number of studies: 70

#> Number of treatments: 23

#> Number of agents: 8

#> Median (min, max) doses per agent (incl placebo): 4 (3, 6)

#> Agent-level network is CONNECTED

#>

#> Ttreatment-level network is CONNECTED

#>

# Draw network plot

plot(tripnet)

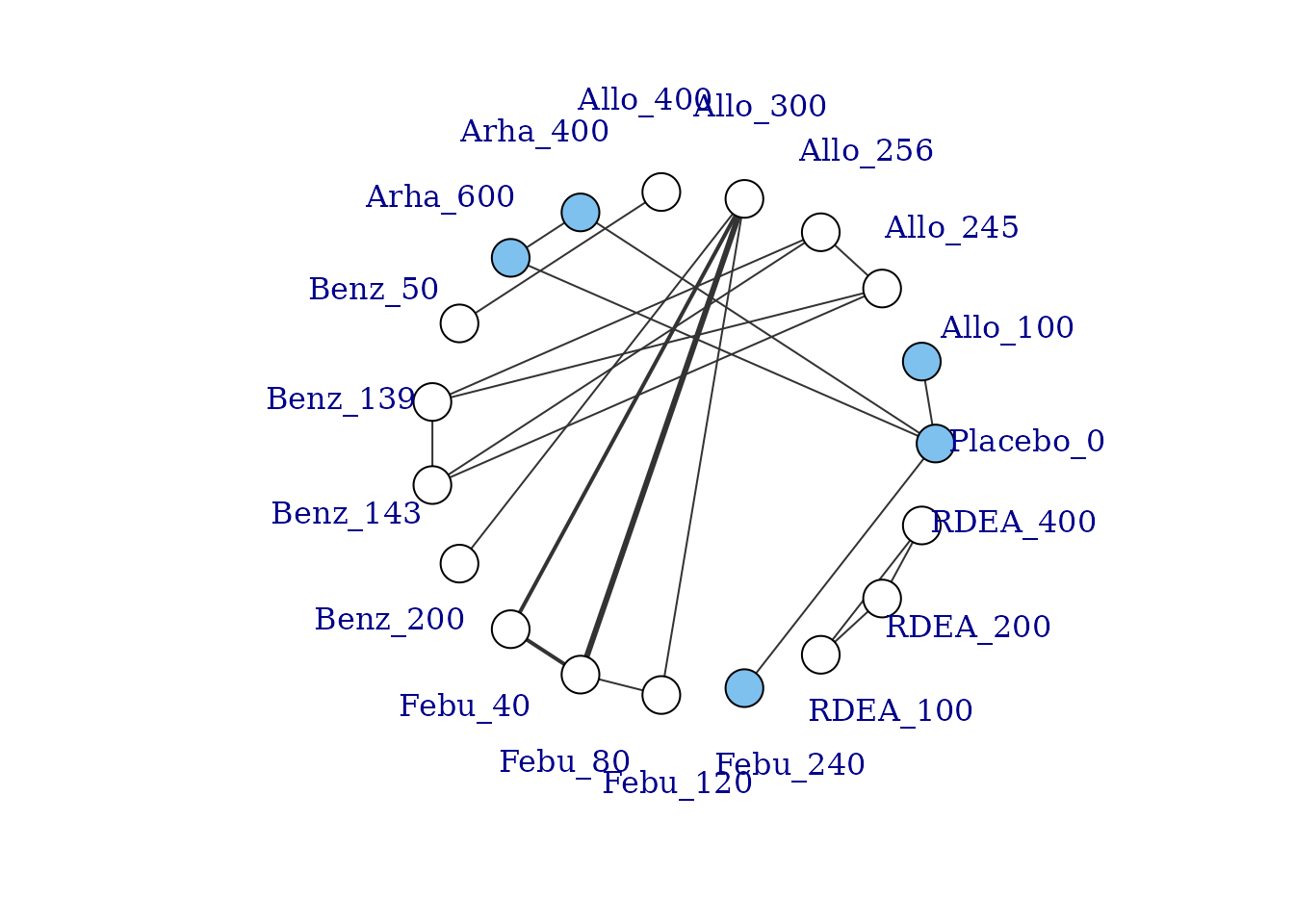

If some vertices are not connected to the network reference treatment through any pathway of head-to-head evidence, a warning will be given. The nodes that are coloured white represent these disconnected vertices.

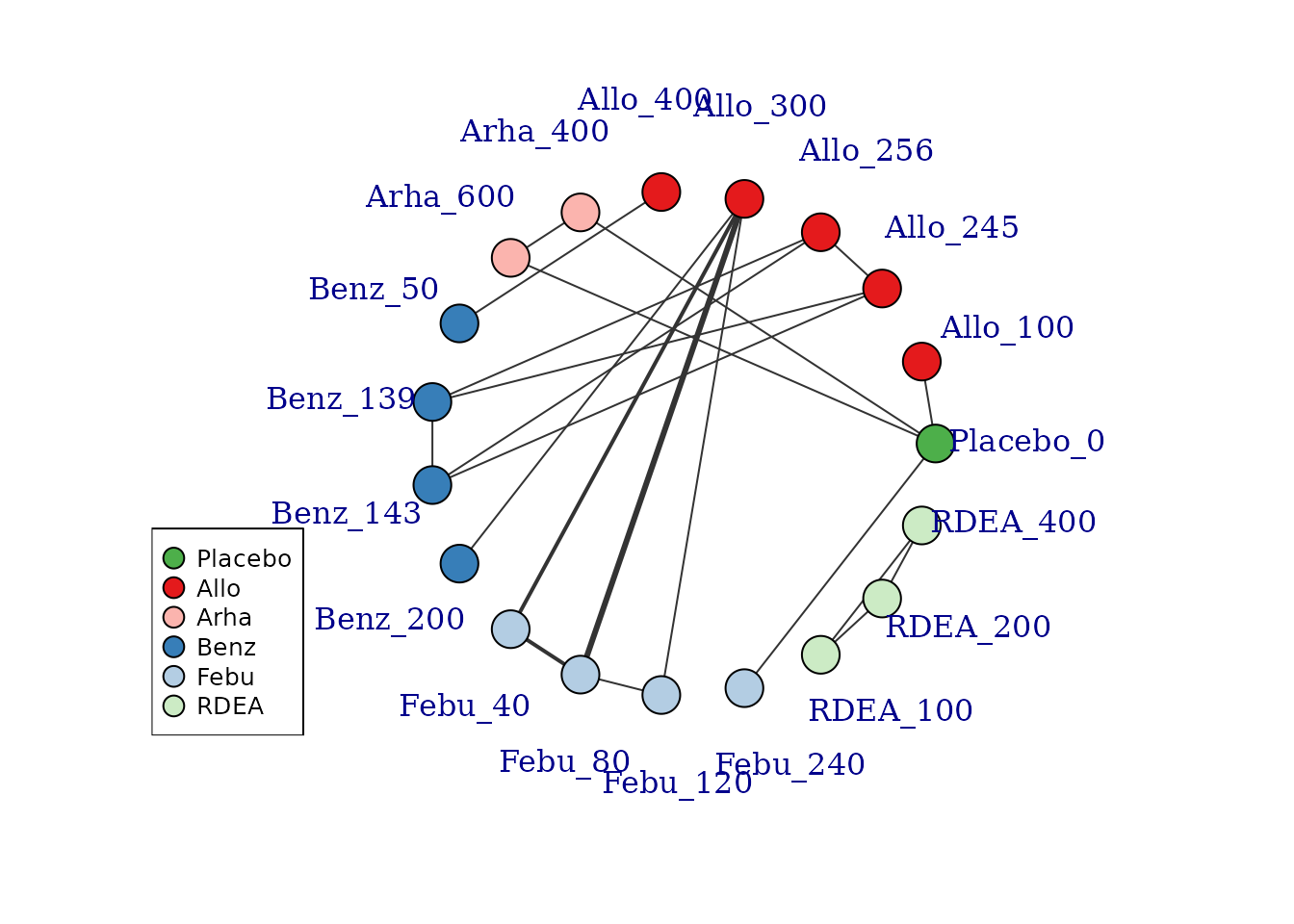

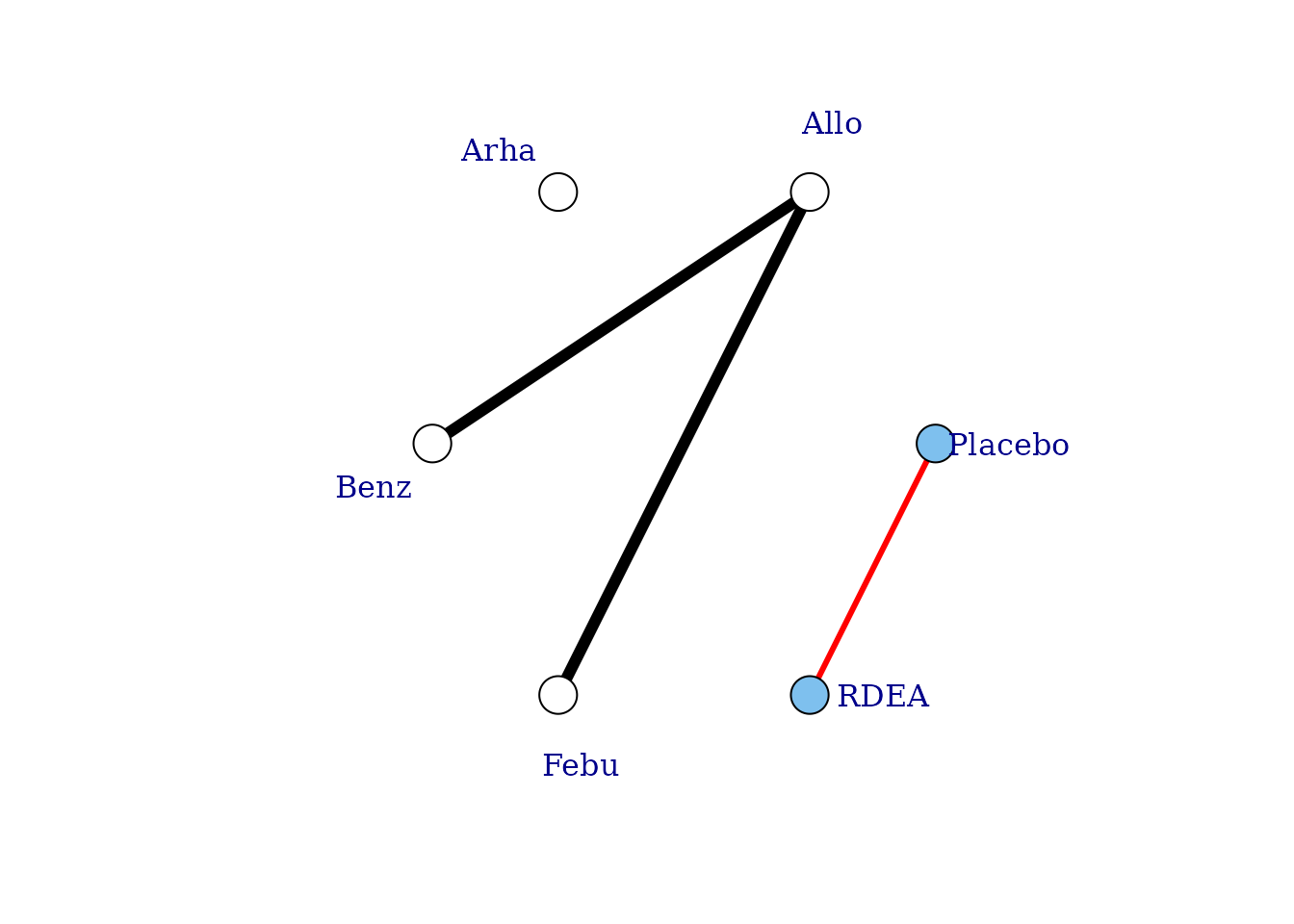

# Prepare data using the gout dataset

goutnet <- mbnma.network(gout)

summary(goutnet)

#> Description: Network

#> Number of studies: 10

#> Number of treatments: 19

#> Number of agents: 6

#> Median (min, max) doses per agent (incl placebo): 5 (3, 6)

#> Agent-level network is DISCONNECTED

#>

#> Treatment-level network is DISCONNECTED

#>

plot(goutnet, label.distance = 5)

#> Warning in check.network(g): The following treatments/agents are not connected

#> to the network reference:

#> Allo_245

#> Allo_256

#> Allo_300

#> Allo_400

#> Benz_50

#> Benz_139

#> Benz_143

#> Benz_200

#> Febu_40

#> Febu_80

#> Febu_120

#> RDEA_100

#> RDEA_200

#> RDEA_400

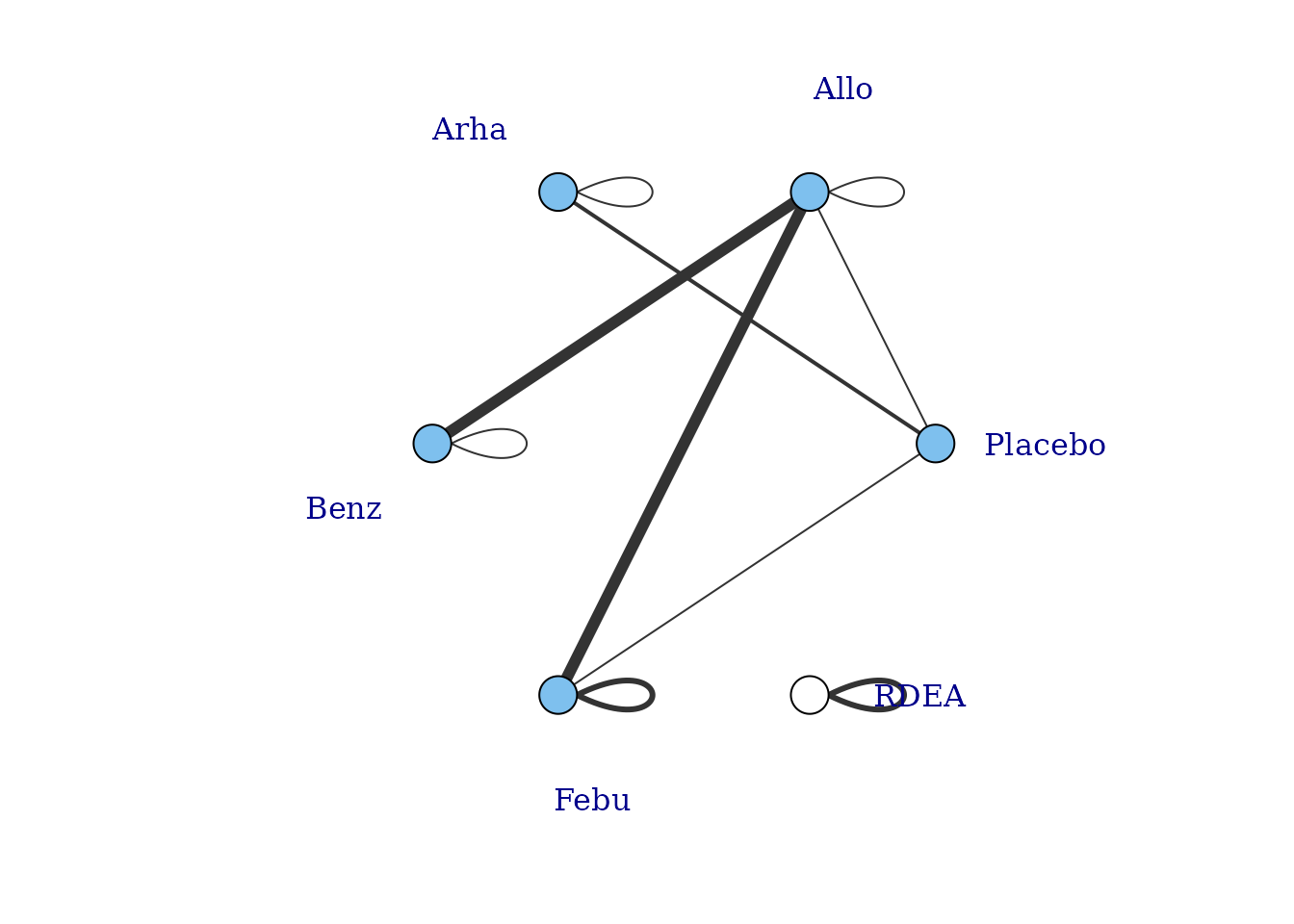

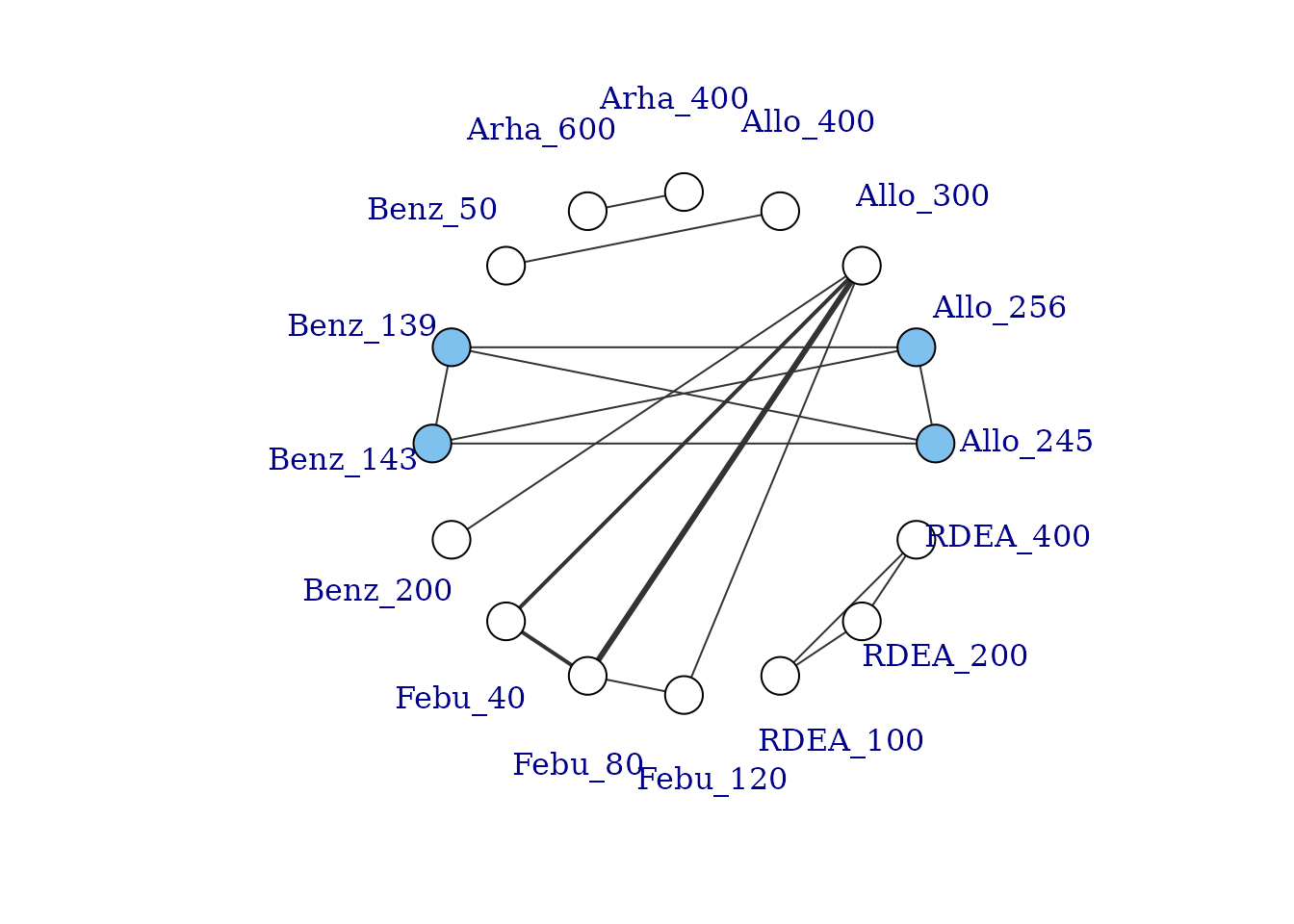

However, whilst at the treatment-level (specific dose of a specific agent), many of these vertices are disconnected, at the agent level they are connected (via different doses of the same agent), meaning that via the dose-response relationship it is possible to estimate results.

# Plot at the agent-level

plot(goutnet, level="agent", label.distance = 6)

#> Warning in check.network(g): The following treatments/agents are not connected

#> to the network reference:

#> RDEA

One agent (RDEA) is still not connected to the network, but

MBNMAdose allows agents to connect via a placebo response

even if they do not include placebo in a head-to-head trial

(see [Linking disconnected treatments via the dose-response

relationship]).

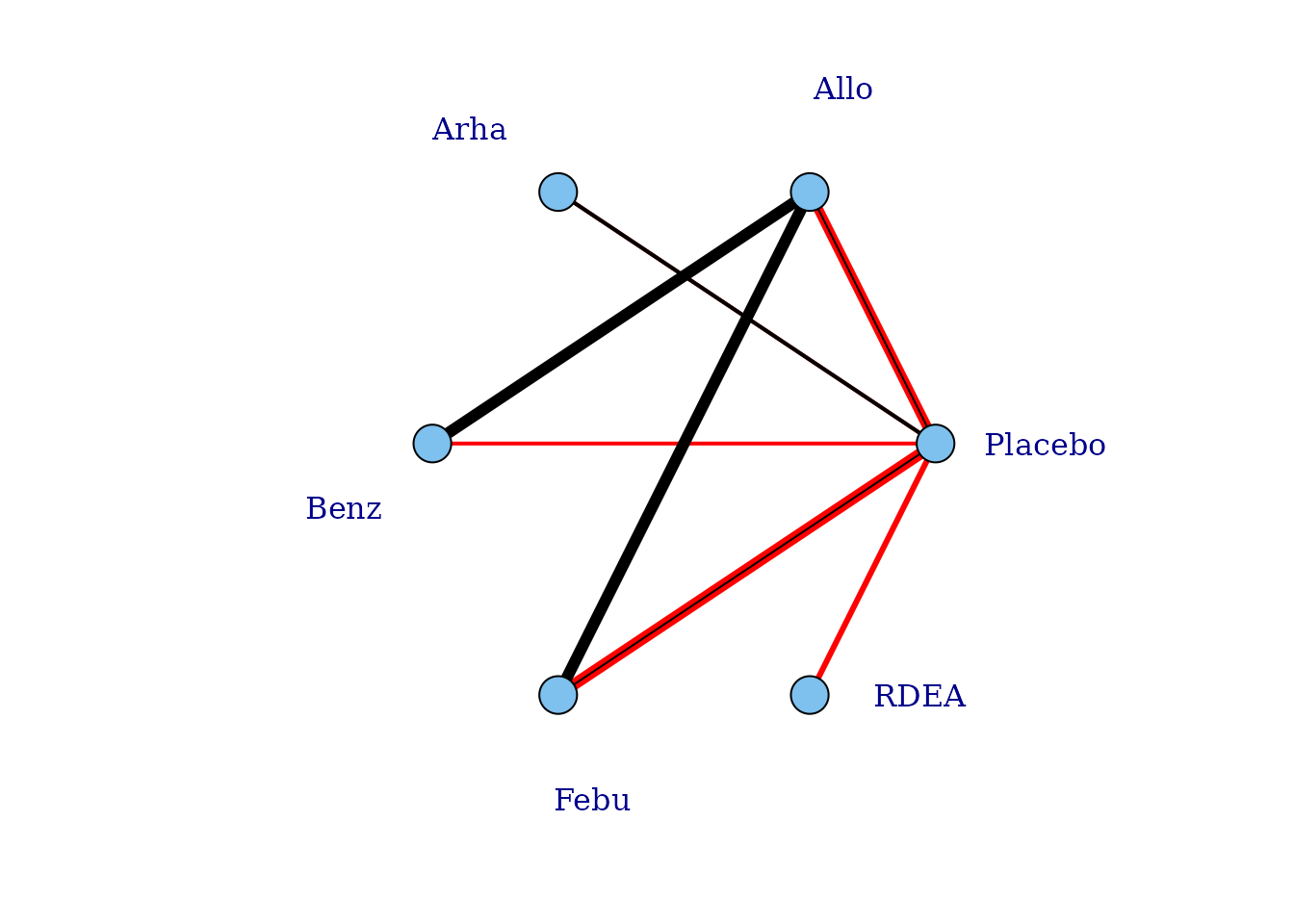

# Plot connections to placebo via a two-parameter dose-response function (e.g. Emax)

plot(goutnet, level="agent", doselink = 2, remove.loops = TRUE, label.distance = 6)

#> Dose-response connections to placebo plotted based on a dose-response

#> function with 1 degrees of freedom

It is also possible to plot a network at the treatment level but to colour the doses by the agent that they belong to.

# Colour vertices by agent

plot(goutnet, v.color = "agent", label.distance = 5)

#> Warning in check.network(g): The following treatments/agents are not connected

#> to the network reference:

#> Allo_245

#> Allo_256

#> Allo_300

#> Allo_400

#> Benz_50

#> Benz_139

#> Benz_143

#> Benz_200

#> Febu_40

#> Febu_80

#> Febu_120

#> RDEA_100

#> RDEA_200

#> RDEA_400

Several further options exist to allow for inclusion of disconnected treatments, such as assuming some sort of common effect among agents within the same class. This is discussed in more detail later in the vignette.

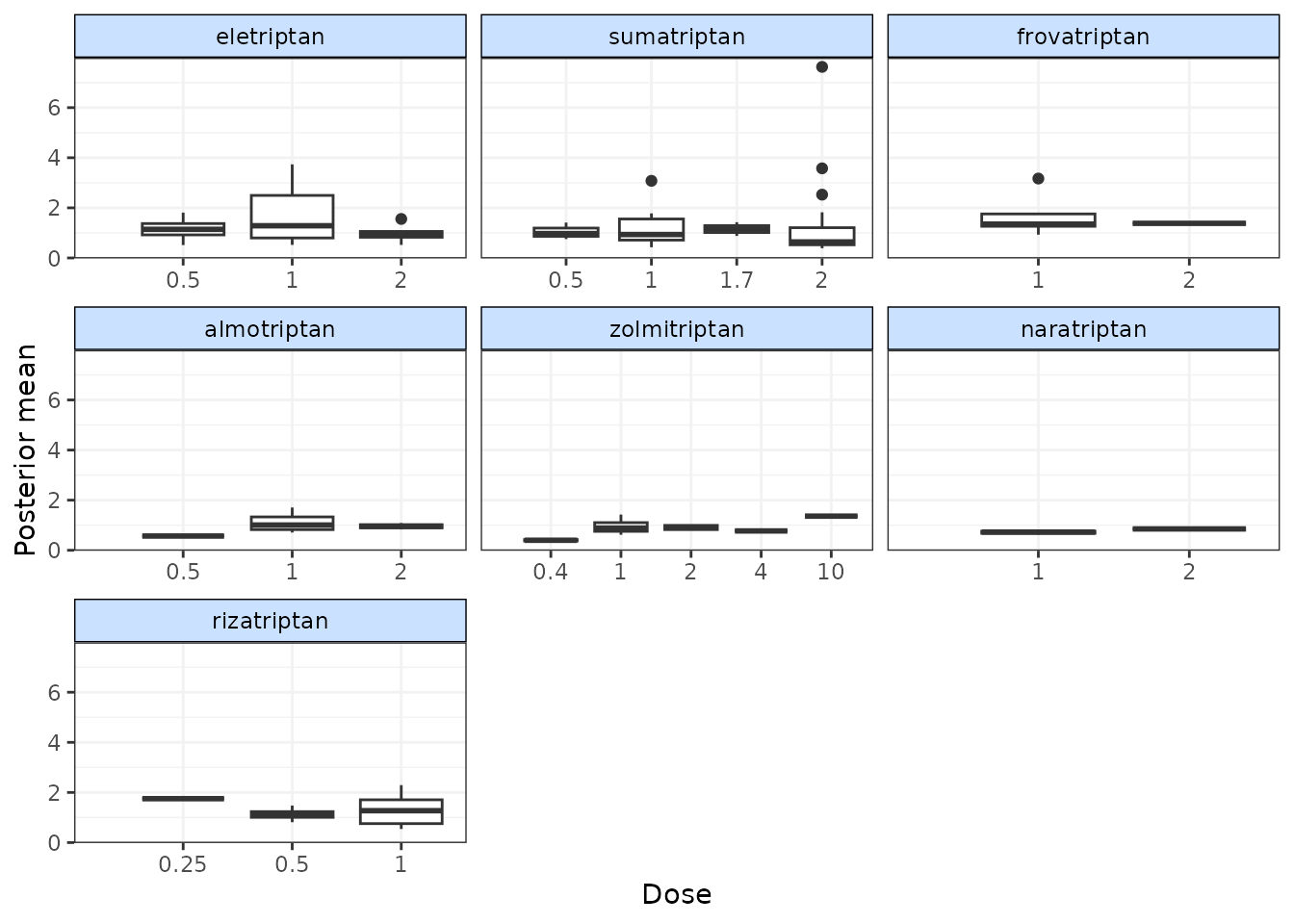

Examining the dose-response relationship

In order to consider which functional forms may be appropriate for

modelling the dose-response relationship, it is useful to look at

results from a “split” network meta-analysis (NMA), in which each dose

of an agent is considered as separate and unrelated (i.e. we are not

assuming any dose-response relationship). The nma.run()

function performs a simple NMA, and by default it drops studies that are

disconnected at the treatment-level (since estimates for these will be

very uncertain if included).

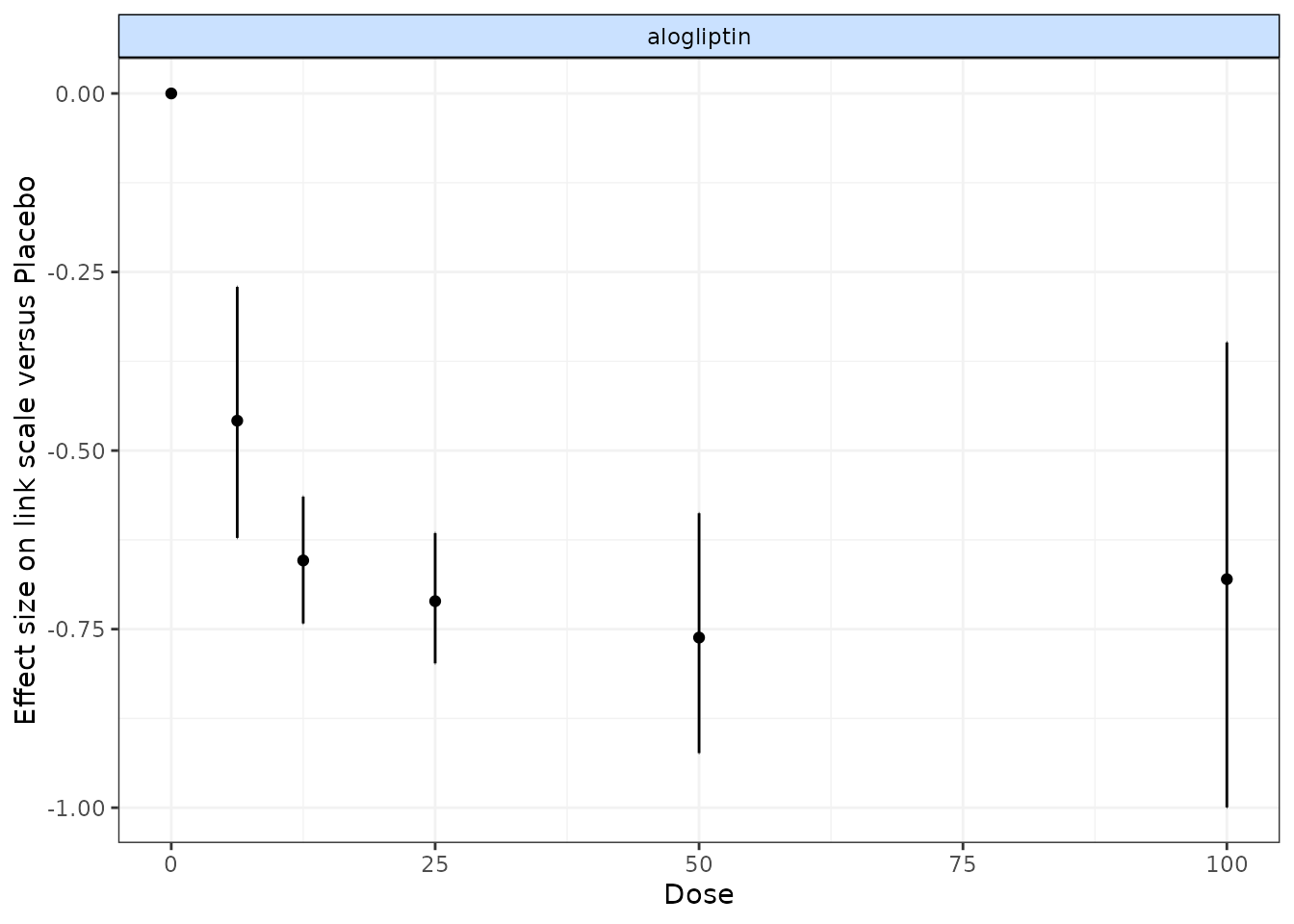

# Run a random effect split NMA using the alogliptin dataset

alognet <- mbnma.network(alog_pcfb)

nma.alog <- nma.run(alognet, method="random")

print(nma.alog)

#> $jagsresult

#> Inference for Bugs model at "/tmp/Rtmp0EKdhX/file1e2776a09d5a", fit using jags,

#> 3 chains, each with 20000 iterations (first 10000 discarded), n.thin = 10

#> n.sims = 3000 iterations saved

#> mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat

#> d[1] 0.000 0.000 0.000 0.000 0.000 0.000 0.000 1.000

#> d[2] -0.456 0.090 -0.622 -0.516 -0.458 -0.397 -0.271 1.001

#> d[3] -0.654 0.045 -0.742 -0.683 -0.654 -0.624 -0.564 1.001

#> d[4] -0.710 0.046 -0.798 -0.740 -0.711 -0.680 -0.616 1.001

#> d[5] -0.760 0.084 -0.924 -0.814 -0.762 -0.706 -0.588 1.001

#> d[6] -0.679 0.166 -1.000 -0.788 -0.680 -0.569 -0.349 1.001

#> sd 0.123 0.028 0.076 0.104 0.121 0.140 0.183 1.003

#> totresdev 46.905 9.631 29.785 40.186 46.327 53.143 67.391 1.002

#> deviance -124.409 9.631 -141.529 -131.128 -124.987 -118.171 -103.923 1.002

#> n.eff

#> d[1] 1

#> d[2] 3000

#> d[3] 3000

#> d[4] 3000

#> d[5] 3000

#> d[6] 2400

#> sd 960

#> totresdev 1300

#> deviance 1100

#>

#> For each parameter, n.eff is a crude measure of effective sample size,

#> and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

#>

#> DIC info (using the rule, pD = var(deviance)/2)

#> pD = 36.8 and DIC = -88.2

#> DIC is an estimate of expected predictive error (lower deviance is better).

#>

#> $trt.labs

#> [1] "Placebo_0" "alogliptin_6.25" "alogliptin_12.5" "alogliptin_25"

#> [5] "alogliptin_50" "alogliptin_100"

#>

#> $UME

#> [1] FALSE

#>

#> attr(,"class")

#> [1] "nma"

# Draw plot of NMA estimates plotted by dose

plot(nma.alog)

In the alogliptin dataset there appears to be a dose-response relationship, and it also appears to be non-linear.

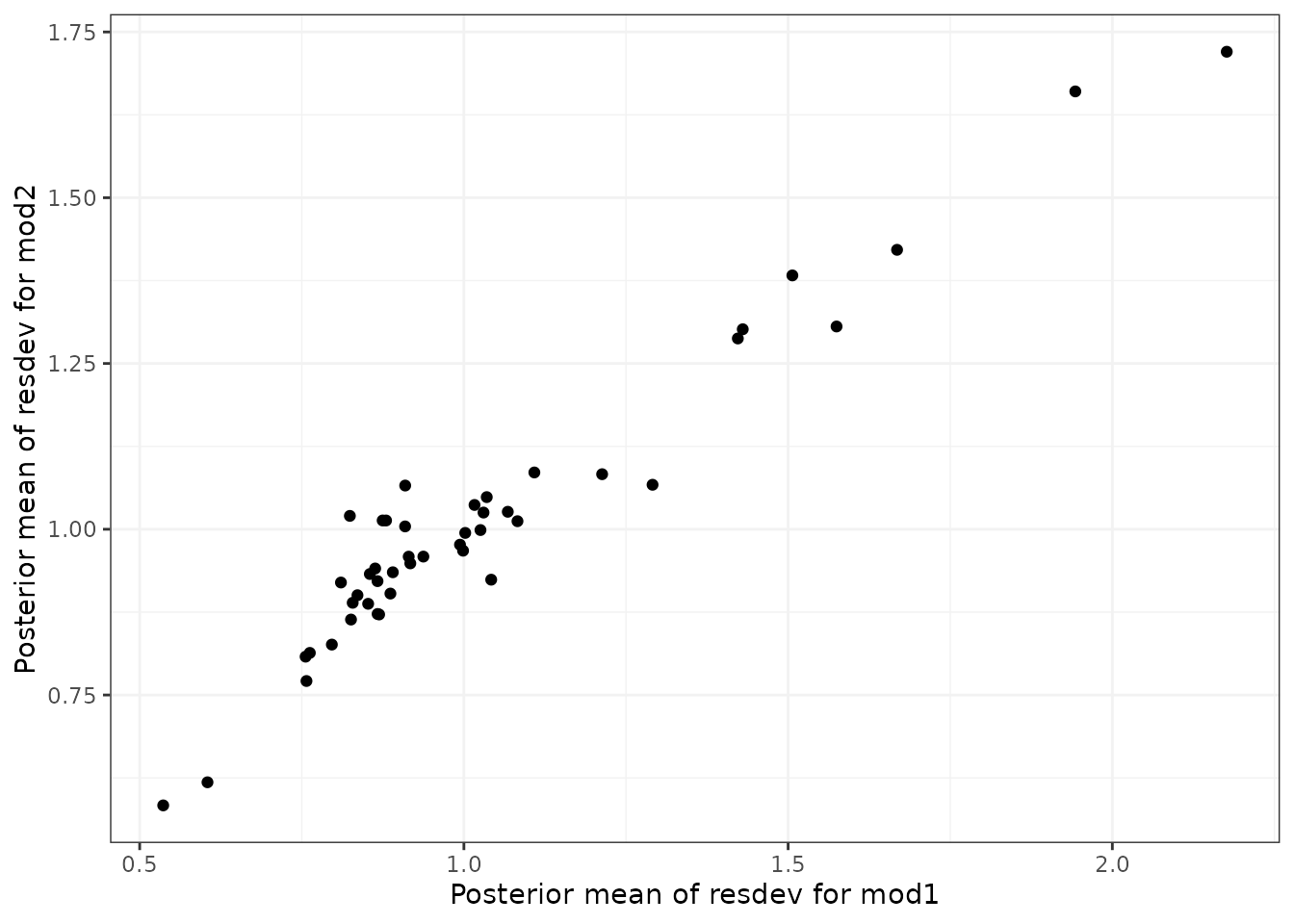

One additional use of nma.run() is that is can be used

after fitting an MBNMA to ensure that fitting a dose-response function

is not leading to poorer model fit than when conducting a conventional

NMA. Comparing the total residual deviance between NMA and MBNMA models

is useful to identify if introducing a dose-response relationship is

leading to poorer model fit. However, it is important to note that if

treatments are disconnected in the NMA and have been dropped

(drop.discon=TRUE), there will be fewer observations

present in the dataset, which will subsequently lead to lower pD and

lower residual deviance, meaning that model fit statistics from NMA and

MBNMA may not be directly comparable.

Analysis using mbnma.run()

MBNMA is performed in MBNMAdose by applying

mbnma.run(). A "mbnma.network" object must be

provided as the data for mbnma.run(). The key arguments

within mbnma.run() involve specifying the functional form

used to model the dose-response, and the dose-response parameters that

comprise that functional form.

Dose-response functions

Various functional forms are implemented within

MBNMAdose, that allow a variety of parameterizations and

dose-response shapes. These are provided as an object of class

"dosefun" to the fun argument in

mbnma.run(). The interpretation of the dose-response

parameter estimates will therefore depend on the dose-response function

used. In previous versions of MBNMAdose (prior to version

0.4.0), wrapper functions were provided for each of the commonly used

dose-response functions in mbnma.run(). For example,

mbnma.emax() is equivalent to

mbnma.run(fun=demax()). This will be deprecated in future

versions.

For the following functions \(x_{i,k}\) refers to the dose and \(t_{i,k}\) to the agent in arm \(k\) of study \(i\).

Log-linear (

dloglin()): \(f(x_{i,k}, t_{i,k})=\lambda_{t_{i,k}} \times ln(x_{i,k} + 1)\) where \(lambda\) controls the gradient of the dose-response relationship.Exponential (

dexp()): \(f(x_{i,k}, t_{i,k})=Emax_{t_{i,k}} (1 - e^{-x_{i,k}})\) where \(Emax\) is the maximum efficacy of an agent.Emax (

demax()): \(f(x_{i,k}, t_{i,k})=\dfrac{Emax_{t_{i,k}} \times {x_{i,k}^{\gamma_{t_{i,k}}}}} {ED50_{t_{i,k}}^{\gamma_{t_{i,k}}} + x_{i,k}^{\gamma_{t_{i,k}}}}\) where \(Emax\) is the maximum efficacy that can be achieved, \(ED50\) is the dose at which 50% of the maximum efficacy is achieved, and \(\gamma\) is the Hill parameter that controls the sigmoidal shape of the function. By default,demax()fits a 2-parameter Emax function in which \(\gamma_{t_{i,k}}=1\) (hill=NULLin function argument).Polynomial (e.g. linear) (

dpoly()): \(f(x_{i,k}, t_{i,k})=\beta_{1_{t_{i,k}}}x_{i,k}+...+\beta_{p_{t_{i,k}}}x^p_{i,k}\) where \(p\) is the degree of the polynomial (e.g. 1 for linear) and \(\beta_p\) are the coefficientsFractional polynomial (

dfpoly()): \(f(x_{i,k}, t_{i,k})=\beta_{1_{t_{i,k}}}x_{i,k}^{\gamma_1}+...+\beta_{p_{t_{i,k}}}x^{\gamma_p}_{i,k}\) where \(p\) is the degree of the polynomial, \(\beta_p\) are the coefficients, and \(x_{i,k}^{\gamma_p}\) is a regular power except where \(\gamma_p=0\) where \({x_{i,k}^{(0)}=ln(x_{i,k})}\). If a fractional polynomial power \({\gamma_p}\) repeats within the function it is multiplied by another \({ln(x_{i,k})}\).Spline functions (

dspline()): B-splines (type="bs"), natural cubic splines (type="ns") and piecewise linear splines (type="ls") can be fitted. \(f(x_{i,k}, t_{i,k})=\sum_{p=1}^{P} \beta_{p,t_{i,k}} X_{p,i,k}\) where \(\beta_{p,t_{i,k}}\) is the regression coefficient for the \(p^{th}\) spline and \(X_{1:P,i,k}\) is the basis matrix for the spline, defined by the spline type.Non-parametric monotonic function (

dnonparam()): Follows the approach of Owen et al. (2015). Thedirectioncan be specified as"increasing"or"decreasing".User-defined function (

duser()): Any function that can be explicitly defined by the user within (see User-defined dose-response function)Agent-specific functions (

dmulti()): Allows for a separate dose-response function to be fitted to each agent in the network (see Agent-specific dose-response functions)

Dose-response parameters

Dose-response parameters can be specified in different ways which affects the key parameters estimated by the model and implies different modelling assumptions. Three different specifications are available for each parameter:

-

"rel"indicates that relative effects should be pooled for this dose-response parameter separately for each agent in the network. This preserves randomisation within included studies and is likely to vary less between studies (only due to effect modification). -

"common"indicates that a single absolute value for this dose-response parameter should be estimated across the whole network that does not vary by agent. This is particularly useful for parameters expected to be constant (e.g. Hill parameters indemax()or fractional polynomial power parameters infpoly()). -

"random"indicates that a single absolute value should be estimated separately for each agent, but that all the agent values vary randomly around a single mean absolute network effect. It is similar to"common"but makes slightly less strong assumptions. -

numeric()Assigned a numeric value - this is similar to assigning"common", but the single absolute value is assigned as a numeric value by the user, rather than estimated from the data.

In mbnma.run(), an additional argument,

method, indicates what method to use for pooling relative

effects and can take either the values "common", implying

that all studies estimate the same true effect (akin to a “fixed effect”

meta-analysis), or "random", implying that all studies

estimate a separate true effect, but that each of these true effects

vary randomly around a true mean effect. This approach allows for

modelling of between-study heterogeneity.

If relative effects ("rel") are modelled on more than

one dose-response parameter then by default, a correlation will be

assumed between the dose-response parameters, which will typically

improve estimation (provided the parameters are correlated…they usually

are). This can be prevented by setting cor=FALSE.

Output

mbnma.run() returns an object of class

c("rjags", "mbnma"). summary() provides

posterior medians and 95% credible intervals (95%CrI) for different

parameters in the model, naming them by agent and giving some

explanation of the way they have been specified in the model.

print() can also be used to give full summary statistics of

the posterior distributions for monitored nodes in the JAGS model.

Estimates are automatically reported for parameters of interest

depending on the model specification (unless otherwise specified in

parameters.to.save).

Dose-response parameters will be named in the output, with a separate

coefficient for each agent (if specified as "rel"). If

class effects are modelled, parameters for classes are represented by

the upper case name of the dose-response parameter they correspond to

(e.g. EMAX will be the class effects on emax).

The SD of the class effect (e.g. sd.EMAX,

sd.BETA.1) is the SD of agents within a class for the

dose-response parameter they correspond to.

sd corresponds to the between-study SD. However,

sd. followed by a dose-response parameter name

(e.g. sd.emax, sd.beta.1) is the between-agent

SD for dose response parameters modeled using "common" or

"random".

totresdev is the residual deviance, and

deviance the deviance of the model. Model fit statistics

for pD (effective number of parameters) and

DIC (Deviance Information Criterion) are also reported,

with an explanation as to how they have been calculated.

Examples

An example MBNMA of the triptans dataset using an Emax dose-response function and common treatment effects that pool relative effects for each agent separately on both Emax and ED50 parameters follows:

# Run an Emax dose-response MBNMA

mbnma <- mbnma.run(tripnet, fun=demax(emax="rel", ed50="rel"),

method="random")

#> 'ed50' parameters are on exponential scale to ensure they take positive values on the natural scale

#> `likelihood` not given by user - set to `binomial` based on data provided

#> `link` not given by user - set to `logit` based on assigned value for `likelihood`

# Print neat summary of output

summary(mbnma)

#> ========================================

#> Dose-response MBNMA

#> ========================================

#>

#> Dose-response function: emax

#>

#> Pooling method

#>

#> Method: Random effects estimated for relative effects

#>

#> Parameter Median (95%CrI)

#> -----------------------------------------------------------------------

#> Between-study SD for relative effects 0.283 (0.188, 0.386)

#>

#>

#> emax dose-response parameter results

#>

#> Pooling: relative effects for each agent

#>

#> |Agent |Parameter | Median| 2.5%| 97.5%|

#> |:------------|:---------|-------:|--------:|-------:|

#> |eletriptan |emax[2] | 2.6092| 2.0069| 3.5414|

#> |sumatriptan |emax[3] | 1.7729| 1.2298| 3.0327|

#> |frovatriptan |emax[4] | 1.3439| 0.9102| 9.0463|

#> |almotriptan |emax[5] | 6.3533| 0.9518| 61.9164|

#> |zolmitriptan |emax[6] | 2.0909| 1.1369| 3.7343|

#> |naratriptan |emax[7] | 12.9692| -39.5401| 67.6623|

#> |rizatriptan |emax[8] | 2.8095| 1.4616| 46.6488|

#>

#>

#> ed50 dose-response parameter results

#>

#> Parameter modelled on exponential scale to ensure it takes positive values

#> on the natural scale

#> Pooling: relative effects for each agent

#>

#> |Agent |Parameter | Median| 2.5%| 97.5%|

#> |:------------|:---------|--------:|--------:|-------:|

#> |eletriptan |ed50[2] | -0.6044| -1.6368| 0.1634|

#> |sumatriptan |ed50[3] | -0.5928| -23.7322| 0.7469|

#> |frovatriptan |ed50[4] | -19.8711| -68.6036| 1.9035|

#> |almotriptan |ed50[5] | 1.7861| -35.9229| 4.2992|

#> |zolmitriptan |ed50[6] | -0.3326| -45.6145| 0.8503|

#> |naratriptan |ed50[7] | 4.1384| -43.4636| 55.8782|

#> |rizatriptan |ed50[8] | -0.2863| -11.1133| 3.3577|

#>

#>

#> Model Fit Statistics

#> Effective number of parameters:

#> pD calculated using the Kullback-Leibler divergence = 136.3

#>

#> Deviance = 1092.1

#> Residual deviance = 189

#> Deviance Information Criterion (DIC) = 1228.4In this example the emax parameters are the maximum

efficacy that can be achieved for each agent. The ed50

parameters are the the dose at which 50% of the maximum efficacy is

achieved for each agent. Results for ED50 are given on the log scale as

it is constrained to be greater than zero. sd corresponds

to the between-study SD (included because

method="random").

Instead of estimating a separate relative effect for each agent, a simpler dose-response model that makes stronger assumptions could estimate a single parameter across the whole network for ED50, but still estimate a separate effect for each agent for Emax:

# Emax model with single parameter estimated for Emax

emax <- mbnma.run(tripnet, fun=demax(emax="rel", ed50="common"),

method="random")

#> 'ed50' parameters are on exponential scale to ensure they take positive values on the natural scale

#> `likelihood` not given by user - set to `binomial` based on data provided

#> `link` not given by user - set to `logit` based on assigned value for `likelihood`

summary(emax)

#> ========================================

#> Dose-response MBNMA

#> ========================================

#>

#> Dose-response function: emax

#>

#> Pooling method

#>

#> Method: Random effects estimated for relative effects

#>

#> Parameter Median (95%CrI)

#> -----------------------------------------------------------------------

#> Between-study SD for relative effects 0.243 (0.158, 0.339)

#>

#>

#> emax dose-response parameter results

#>

#> Pooling: relative effects for each agent

#>

#> |Agent |Parameter | Median| 2.5%| 97.5%|

#> |:------------|:---------|------:|------:|------:|

#> |eletriptan |emax[2] | 2.8060| 2.3443| 3.4652|

#> |sumatriptan |emax[3] | 1.8875| 1.6154| 2.2653|

#> |frovatriptan |emax[4] | 2.0853| 1.4105| 2.9391|

#> |almotriptan |emax[5] | 1.7693| 1.3417| 2.2986|

#> |zolmitriptan |emax[6] | 2.0913| 1.6754| 2.6840|

#> |naratriptan |emax[7] | 1.0183| 0.4332| 1.6720|

#> |rizatriptan |emax[8] | 2.6959| 2.1921| 3.4358|

#>

#>

#> ed50 dose-response parameter results

#>

#> Parameter modelled on exponential scale to ensure it takes positive values

#> on the natural scale

#> Pooling: single parameter across all agents in the network

#>

#> |Parameter | Median| 2.5%| 97.5%|

#> |:---------|-------:|-------:|------:|

#> |ed50 | -0.3932| -0.8875| 0.0902|

#>

#>

#> Model Fit Statistics

#> Effective number of parameters:

#> pD calculated using the Kullback-Leibler divergence = 122.5

#>

#> Deviance = 1093.7

#> Residual deviance = 190.6

#> Deviance Information Criterion (DIC) = 1216.2In this example ed50 only has a single parameter, which

corresponds to the dose at which 50% of the maximum efficacy is

achieved, assumed to be equal across all agents in the network.

Parameter interpretation

Parameter interpretation depends both on the link scale on which the outcome is modeled, and on the dose-response function used in the model.

For example for a binomial outcome modeled using a logit link

function and an Emax dose-response function, the emax

parameter represents the maximum efficacy on the logit scale - it is

modeled on the outcome scale and hence is dependent on the link

function. As indicated in the help file (?demax()), the

ed50 parameter is modeled on the log-scale to ensure that

it takes positive values on the natural scale, but is not modeled on the

outcome scale so is not dependent on the link function. Therefore it can

be interpreted as the log-dose at which 50% of the maximum efficacy is

achieved. The hill parameter is also modeled on the

log-scale, and it can be interpreted as a log-value that controls the

sigmoidicity of the dose-response function for the outcome on the logit

scale.

For a continuous outcome modeled using link="smd",

whilst not a true link function it implies modelling Standardised Mean

Differences (SMD). For a linear dose-response function

(dpoly(degree=1)), beta.1 represents the

change in SMD for each additional unit of dose. For a quadratic

dose-response function (dpoly(degree=2)),

beta.2 represents the change in beta.1 for

each additional unit of dose.

With some dose-response functions (e.g. splines, fractional

polynomials) parameter interpretation can be challenging. The

get.relative() function can make this easier as this allows

relative effects to be estimated between agents at specific doses, which

is typically much more easily understandable and presentable (see Estimating relative

effects).

Additional arguments for mbnma.run()

Several additional arguments can be given to mbnma.run()

that require further explanation.

Link functions for likelihood=="normal"

For data with normal likelihood, users are likely to typically

analyse data using an identity link function, the default given to the

link argument in mbnma.run() when

likelihood="normal". This assumes an additive treatment

effect (e.g. mean difference).

However, by specifying link="log" a user can model a log

link and therefore assume a multiplicative treatment effect. For

continuous data this models the treatment effect as a Ratio of Means

(RoM) (Friedrich, Adhikari, and Beyene

2011). This also provides an advantage as the treatment effect is

scale independent (i.e. studies measuring the same outcome using

different measurement scales can be analysed simultaneously). However,

within-study treatment effects must all be of the same direction (either

positive or negative), and change from baseline measures must be

adjusted so that they are also expressed as RoMs (log(follow-up) -

log(baseline)) to avoid combining additive and multiplicative

assumptions within the same analysis.

An alternative approach for modelling a measurement scale-independent

treatment effect whilst still assuming additive treatment effects is to

perform the analysis using Standardised Mean Differences (SMD). Whilst

not strictly a different link function, this can be specified using

link="smd". Currently, MBNMAdose standardises

treatment effects using the pooled standard deviation (SD) in each

study. The resulting treatment effects are reported in units of SD as

SMDs.

A more robust approach to minimise bias from estimation of

within-study SD would be to use a single reference SD for

standardisation of each scale included in the dataset. This is something

MBNMAdose will incorporate in the future. For further

details of analysis of continuous data that include discussion of both

RoM and SMD see (Daly et al. 2021).

Class effects

Similar effects between agents within the network can be modelled using class effects. This requires assuming that different agents have some sort of common class effect, perhaps due to similar mechanisms of action. Advantages of this is that class effects can be used to connect agents that might otherwise be disconnected from the network, and they can also provide additional information on agents that might otherwise have insufficient data available to estimate a desired dose-response. The drawback is that this requires making additional assumptions regarding similarity of efficacy.

One difficult of this modelling aspect in particular is that the scales for dose-response parameters must be the same across different agents within a class for this assumption to be valid. For example, in an Emax model it may be reasonable to assume a class effect on the Emax parameter, as this is parameterised on the response scale and so could be similar across agents of the same class. However, the ED50 parameter is parameterised on the dose scale, which is likely to differ for each agent and so an assumption of similarity between agents for this parameter may be less valid. One way to try to account for this issue and make dose scales more consistent across agents is to standardise doses for each agent relative to its “common” dose (see Thorlund et al. (thorlund2015?)), though we expect that this may lead to bias if the common dose is located at a different point along the dose-response curve.

Class effects can only be applied to dose-response parameters which

vary by agent. In mbnma.run() they are supplied as a list,

in which each element is named following the name of the corresponding

dose-response parameter as defined in the dose-response function. The

names will therefore differ when using wrapper functions for

mbnma.run(). The class effect for each dose-response

parameter can be either "common", in which the effects for

each agent within the same class are constrained to a common class

effect, or "random", in which the effects for each agent

within the same class are assumed to be randomly distributed around a

shared class mean.

When working with class effects in MBNMAdose a variable

named class must be included in the original data frame

provided to mbnma.network(). Below we assign a class for

two similar agents in the dataset - Naproxcinod and Naproxen. We will

estimate separate effects for all other agents, so we set their classes

to be equal to their agents.

# Using the osteoarthritis dataset

pain.df <- osteopain

# Set class equal to agent for all agents

pain.df$class <- pain.df$class

# Set a shared class (NSAID) only for Naproxcinod and Naproxen

pain.df$class[pain.df$agent %in% c("Naproxcinod", "Naproxen")] <-

"NSAID"

# Run a restricted cubic spline MBNMA with a random class effect on beta.1

classnet <- mbnma.network(pain.df)

splines <- mbnma.run(classnet, fun=dspline(type="bs", knots=2), class.effect = list(beta.1="random"))Mean class effects are given in the output as

D.ed50/D.1 parameters. These can be

interpreted as the effect of each class for Emax parameters

(beta.1). Note the number of D.ed50 parameters

is therefore equal to the number of classes defined in the dataset.

If we had specified that the class effects were

"random", each treatment effect for Emax

(beta.1) would be assumed to be randomly distributed around

its class mean with SD given in the output as

sd.D.ed50/sd.D.1.

Mean class effects are represented in the output by the upper case

name of the dose-response parameter they correspond to. In this case,

BETA.1 is the class effects on beta.1, the

first spline coefficient. The SD of the class effect is the SD of agents

within a class for the dose-response parameter they correspond to. In

this case sd.BETA.1 is the within-class SD for

beta.1.

User-defined dose-response function

Users can define their own dose-response function using

duser() rather than using one of the functions provided in

MBNMAdose. The dose-response is specified in terms of

beta parameters and dose. This allows a huge

degree of flexibility when defining the dose-response relationship.

The function assigned needs to fulfil a few criteria to be valid: *

dose must always be included in the function * At least one

beta dose-response parameter must be specified, up to a

maximum of four. These must always be named beta.1,

beta.2, beta.3 and beta.4, and

must be included sequentially (i.e. don’t include beta.3 if

beta.2 is not included) * Indices used by JAGS should

not be added (e.g. use dose rather than

dose[i,k]) * Any mathematical/logical operators that can be

implemented in JAGS can be added to the function

(e.g. exp(), ifelse()). See the JAGS manual

(2017) for further details.

# Using the depression SSRI dataset

depnet <- mbnma.network(ssri)

# An example specifying a quadratic dose-response function

quadfun <- ~ (beta.1 * dose) + (beta.2 * (dose^2))

quad <- mbnma.run(depnet, fun=duser(fun=quadfun, beta.1 = "rel", beta.2 = "rel"))|Agent-specific dose-response functions

Different dose-response functions can be used for different agents within the network. This allows for the modelling of more complex dose-response functions in agents for which there are many doses available, and less complex functions in agents for which there are fewer doses available. Note that these models are typically less computationally stable than single dose-response function models, and they are likely to benefit less from modelling correlation between multiple dose-response parameters since there are fewer agents informing correlations between each dose-response parameter.

This can be modeled using the dmulti() dose-response

function and assigning a list of objects of class "dosefun"

to it. Each element in the list corresponds to an agent in the network

(the order of which should be the same as the order of agents in the

"mbnma.network" object). A dose-response function for

Placebo should be included in the list, though which function is used is

typically irrelevant since evaluating the function at dose=0 will

typically equal 0.

# Using the depression SSRI dataset

depnet <- mbnma.network(ssri)

dr.funs <- dmulti(list(

"Placebo"=dpoly(degree=2),

"citalopram"=dpoly(degree=2),

"escitalopram"=dpoly(degree=2),

"fluoxetine"=dspline(type="ns",knots=2),

"paroxetine"=dpoly(degree=2),

"sertraline"=dspline(type="ns",knots=2)

))

multifun <- mbnma.run(depnet, fun=dr.funs, method="common", n.iter=50000)

summary(multifun)Because an MBNMA model with a linear dose-response function

(dpoly(degree=1)) is mathematically equivalent to a

standard NMA model, using agent-specific dose-response functions allows

analysis of datasets that both include multiple doses of different drugs

and interventions for which a dose-response relationship is not

realistic (e.g. surgery) or difficult to assume (e.g. psychotherapy,

exercise interventions). Interventions without a dose-response

relationship can be coded in the dataset as different agents, each of

which should be assigned a dose of 1, and these can then be modeled

using a linear dose-response relationship, whilst agents with a

plausible dose-response can be assigned a function that appropriately

captures their dose-response relationship.

Splines and knots

For a more flexible dose-response shape, various different splines

can be fitted to the data by using dspline(). This model is

very flexible and can allow for a variety of non-monotonic dose-response

relationships, though parameters can be difficult to interpret.

To fit this model, the number/location of knots (the

points at which the different spline pieces meet) should be specified.

If a single number is given, it represents the the number of knots to be

equally spaced across the dose range of each agent. Alternatively

several probabilities can be given that represent the quantiles of the

dose range for each agent at which knots should be located.

Correlation between dose-response parameters

mbnma.run() automatically models correlation between

relative effects dose-response parameters. This can be prevented by

specifying cor=FALSE in mbnma.run(). The

correlation is modeled using a vague Wishart prior, but this can be made

more informative by specifying a scale matrix for the prior. This

corresponds to the expectation of the Wishart prior. A different scale

matrix can be given to the model in omega. Each row of the

scale matrix corresponds to the 1st, 2nd, 3rd, etc. dose-response

parameter that has been modeled using relative effects (as specified in

the dose-response function).

Priors

Default vague priors for the model are as follows:

\[ \begin{aligned} &d_{p,a} \sim N(0,10000)\\ &beta_{p} \sim N(0,10000)\\ &\sigma \sim N(0,400) \text{ limited to } x \in [0,\infty]\\ &\sigma_{p} \sim N(0,400) \text{ limited to } x \in [0,\infty]\\ &D_{p,c} \sim N(0,1000)\\ &\sigma^D_{p} \sim N(0,400) \text{ limited to } x \in [0,\infty]\\ \end{aligned} \]

…where \(p\) is an identifier for the dose-response parameter (e.g. 1 for Emax and 2 for ED50), \(a\) is an agent identifier and \(c\) is a class identifier.

Users may wish to change these, perhaps in order to use more/less informative priors, but also because the default prior distributions in some models may lead to errors when compiling/updating models.

If the model fails during compilation/updating (i.e. due to a problem

in JAGS), mbnma.run() will generate an error and return a

list of arguments that mbnma.run() used to generate the

model. Within this (as within a model that has run successfully), the

priors used by the model (in JAGS syntax) are stored within

"model.arg":

print(mbnma$model.arg$priors)

#> $mu

#> [1] "dnorm(0,0.001)"

#>

#> $ed50

#> [1] "dnorm(0,0.001)"

#>

#> $emax

#> [1] "dnorm(0,0.001)"

#>

#> $sd

#> [1] "dunif(0, 6.021)"In this way a model can first be run with vague priors and then rerun with different priors, perhaps to allow successful computation, perhaps to provide more informative priors, or perhaps to run a sensitivity analysis with different priors. Increasing the precision of prior distributions only a little can also often improve convergence considerably.

To change priors within a model, a list of replacements can be

provided to priors in mbnma.run(). The name of

each element is the name of the parameter to change (without indices)

and the value of the element is the JAGS distribution to use for the

prior. This can include censoring or truncation if desired. Only the

priors to be changed need to be specified - priors for parameters that

aren’t specified will take default values.

For example, if we want to use tighter priors for the half-normal SD parameters we could increase the precision:

pD (effective number of parameters)

The default value in for pd in mbnma.run()

is "pv", which uses the value automatically calculated in

the R2jags package as pv = var(deviance)/2.

Whilst this is easy to calculate, it is numerically less stable than

pD and may perform more poorly in certain conditions (Gelman, Hwang, and Vehtari 2014).

A commonly-used approach for calculating pD is the plug-in method

(pd="plugin") (Spiegelhalter et al.

2002). However, this can sometimes result in negative

non-sensical values due to skewed posterior distributions for deviance

contributions that can arise when fitting non-linear models.

Another approach that is more reliable than the plug-in method when

modelling non-linear effects is using the Kullback-Leibler divergence

(pd="pd.kl") (Plummer 2008).

The disadvantage of this approach is that it requires running additional

MCMC iterations, so can be slightly slower to calculate.

Finally, pD can also be calculated using an optimism adjustment

(pd="popt") which allows for calculation of the penalized

expected deviance (Plummer 2008). This

adjustment allows for the fact that data used to estimate the model is

the same as that used to assess its parsimony. It also requires running

additional MCMC iterations.

Arguments to be sent to JAGS

In addition to the arguments specific to mbnma.run() it

is also possible to use any arguments to be sent to

R2jags::jags(). Most of these are likely to relate to

improving the performance of MCMC simulations in JAGS and may help with

parameter convergence (see [Convergence]). Some of the key arguments

that may be of interest are:

-

n.chainsThe number of Markov chains to run (default is 3) -

n.iterThe total number of iterations per MCMC chain -

n.burninThe number of iterations that are discarded to ensure iterations are only saved once chains have converged -

n.thinThe thinning rate which ensures that results are only saved for 1 in everyn.thiniterations per chain. This can be increased to reduce autocorrelation

Connecting networks via the dose-response relationship

One of the strengths of dose-response MBNMA is that it allows treatments to be connected in a network that might otherwise be disconnected, by linking up different doses of the same agent via the dose-response relationship (Pedder, Dias, Bennetts, et al. 2021). To illustrate this we can generate a version of the gout dataset which excludes placebo (to artificially disconnect the network):

# Generate dataset without placebo

noplac.gout <-

gout[!gout$studyID %in% c(2001, 3102),] # Drop two-arm placebo studies

noplac.gout <-

noplac.gout[noplac.gout$agent!="Plac",] # Drop placebo arm from multi-arm studies

# Create mbnma.network object

noplac.net <- mbnma.network(noplac.gout)

# Plot network

plot(noplac.net, label.distance=5)

#> Warning in check.network(g): The following treatments/agents are not connected

#> to the network reference:

#> Allo_300

#> Allo_400

#> Arha_400

#> Arha_600

#> Benz_50

#> Benz_200

#> Febu_40

#> Febu_80

#> Febu_120

#> RDEA_100

#> RDEA_200

#> RDEA_400

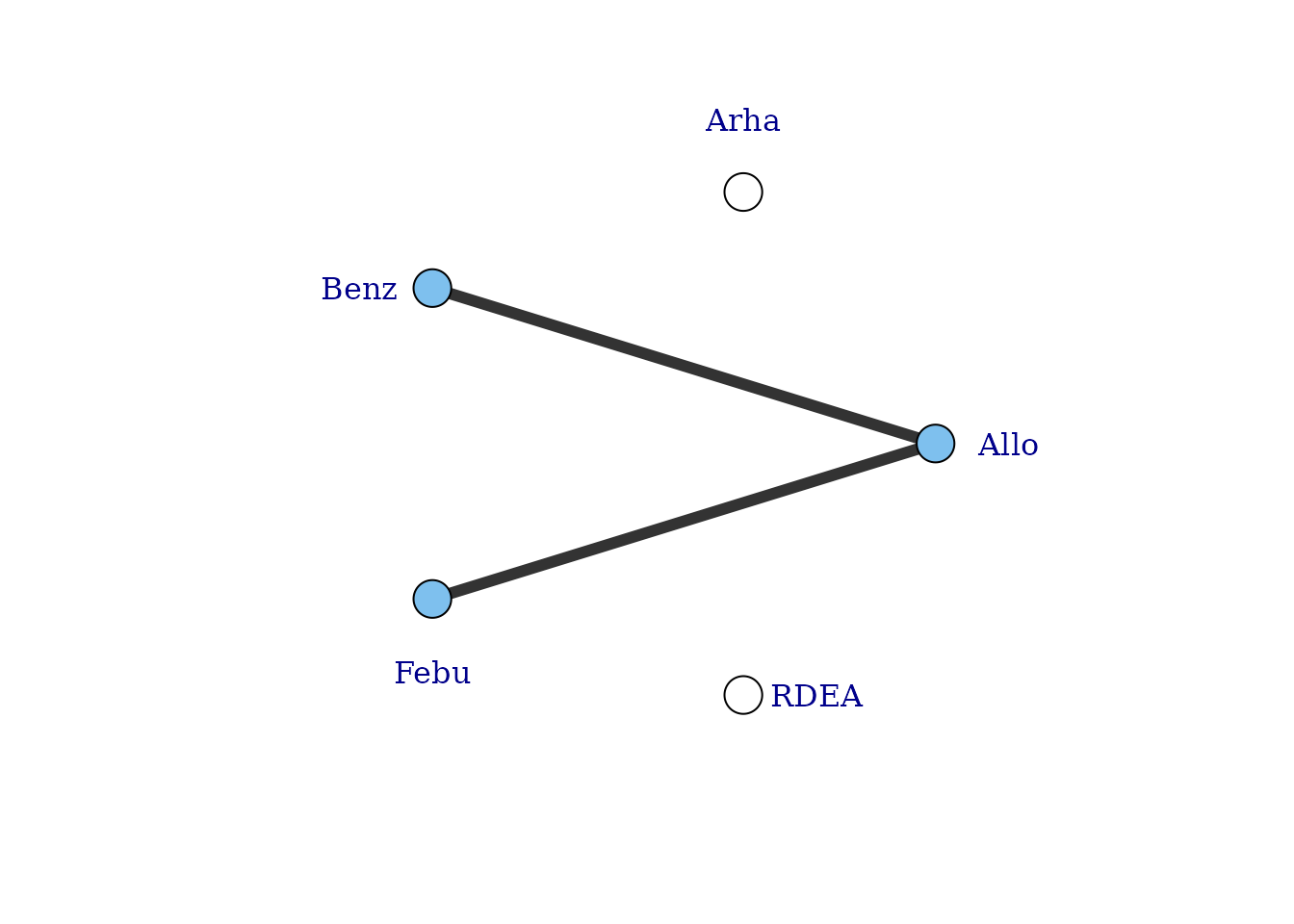

This results in a very disconnected network, and if we were to conduct a conventional “split” NMA (whereby different doses of an agent are considered to be independent), we would only be able to estimate relative effects for a very small number of treatments. However, if we assume a dose-response relationship then these different doses can be connected via this relationship, and we can connect up more treatments and agents in the network.

# Network plot at the agent level illustrates how doses can connect using MBNMA

plot(noplac.net, level="agent", remove.loops = TRUE, label.distance = 4)

#> Warning in check.network(g): The following treatments/agents are not connected

#> to the network reference:

#> Arha

#> RDEA

There are still two agents that do not connect to the network because they involve comparisons of different doses of the same agent. However, multiple doses of an agent within a study allow us to estimate the dose-response relationship and tell us something about the placebo (dose = 0) response - the number of different doses of an agent within a study will determine the degrees of freedom with which we are able to estimate a given dose-response function. Although the placebo response is not estimated directly in the MBNMA framework (it is modelled as a nuisance parameter), it allows us to connect the dose-response function estimated for an agent in one study, with that in another.

To visualise this, we can use the doselink argument in

plot(mbnma.network). The integer given to this argument

indicates the minimum number of doses from which a dose-response

function could be estimated, and is equivalent to the number of

parameters in the desired dose-response function plus one. For example

for an exponential function, we would require at least two doses on a

dose-response curve (including placebo), since this would allow one

degree of freedom with which to estimate the one-parameter dose-response

function. By modifying the doselink argument we can

determine the complexity of a dose-response function that we might

expect to be able to estimate whilst still connecting all agents within

the network.

If placebo is not included in the original dataset then this argument will also add a node for placebo to illustrate the connection.

# Network plot assuming connectivity via two doses

# Allows estimation of a single-parameter dose-response function

plot(noplac.net, level="agent", remove.loops = TRUE, label.distance = 4,

doselink=2)

#> Dose-response connections to placebo plotted based on a dose-response

#> function with 1 degrees of freedom

# Network plot assuming connectivity via three doses

# Allows estimation of a two-parameter dose-response function

plot(noplac.net, level="agent", remove.loops = TRUE, label.distance = 4,

doselink=3)

#> Warning in check.network(g): The following treatments/agents are not connected

#> to the network reference:

#> Allo

#> Arha

#> Benz

#> Febu

#> Dose-response connections to placebo plotted based on a dose-response

#> function with 2 degrees of freedom

In this way we can fully connect up treatments in an otherwise disconnected network, though unless informative prior information is used this will be limited by the number of doses of agents within included studies. See Pedder et al. (2021) for more details on this.

Non-parametric dose-response functions

In addition to the parametric dose-response functions described

above, a non-parametric monotonic dose-response relationship can also be

specified in mbnma.run(). fun=dnonparam() can

be used to specify a monotonically increasing

(direction="increasing") or decreasing

(direction="decreasing") dose-response respectively. This

is achieved in the model by imposing restrictions on the prior

distributions of treatment effects that ensure that each increasing dose

of an agent has an effect that is either the same or greater than the

previous dose. The approach results in a similar model to that developed

by Owen et al. (2015).

By making this assumption, this model is slightly more informative, and can lead to some slight gains in precision if relative effects are otherwise imprecisely estimated. However, because a functional form for the dose-response is not modeled, it cannot be used to connect networks that are disconnected at the treatment-level, unlike a parametric MBNMA.

In the case of MBNMA, it may be useful to compare the fit of a non-parametric model to that of a parametric dose-response function, to ensure that fitting a parametric dose-response function does not lead to significantly poorer model fit.

When fitting a non-parametric dose-response model, it is important to

correctly choose the expected direction of the monotonic

response, otherwise it can lead to computation error.

nonparam <- mbnma.run(tripnet, fun=dnonparam(direction="increasing"), method="random")

#> `likelihood` not given by user - set to `binomial` based on data provided

#> `link` not given by user - set to `logit` based on assigned value for `likelihood`

print(nonparam)

#> Inference for Bugs model at "/tmp/Rtmp0EKdhX/file1e2724103d13", fit using jags,

#> 3 chains, each with 20000 iterations (first 10000 discarded), n.thin = 10

#> n.sims = 3000 iterations saved

#> mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat

#> d.1[1,1] 0.000 0.000 0.000 0.000 0.000 0.000 0.000 1.000

#> d.1[1,2] 0.000 0.000 0.000 0.000 0.000 0.000 0.000 1.000

#> d.1[2,2] 1.190 0.176 0.843 1.073 1.190 1.306 1.537 1.001

#> d.1[3,2] 1.741 0.121 1.505 1.661 1.741 1.821 1.975 1.001

#> d.1[4,2] 2.048 0.142 1.773 1.954 2.046 2.143 2.325 1.001

#> d.1[1,3] 0.000 0.000 0.000 0.000 0.000 0.000 0.000 1.000

#> d.1[2,3] 0.945 0.139 0.639 0.855 0.960 1.045 1.186 1.002

#> d.1[3,3] 1.119 0.084 0.952 1.064 1.121 1.176 1.280 1.001

#> d.1[4,3] 1.260 0.112 1.053 1.181 1.257 1.336 1.481 1.002

#> d.1[5,3] 1.461 0.086 1.295 1.403 1.460 1.518 1.629 1.002

#> d.1[1,4] 0.000 0.000 0.000 0.000 0.000 0.000 0.000 1.000

#> d.1[2,4] 1.272 0.211 0.874 1.131 1.269 1.412 1.695 1.007

#> d.1[3,4] 1.658 0.327 1.101 1.422 1.636 1.862 2.346 1.006

#> d.1[1,5] 0.000 0.000 0.000 0.000 0.000 0.000 0.000 1.000

#> d.1[2,5] 0.626 0.240 0.147 0.458 0.635 0.803 1.068 1.001

#> d.1[3,5] 1.044 0.124 0.808 0.959 1.042 1.124 1.289 1.001

#> d.1[4,5] 1.450 0.217 1.072 1.291 1.440 1.593 1.900 1.001

#> d.1[1,6] 0.000 0.000 0.000 0.000 0.000 0.000 0.000 1.000

#> d.1[2,6] 0.785 0.307 0.134 0.572 0.815 1.024 1.287 1.001

#> d.1[3,6] 1.250 0.117 1.027 1.173 1.248 1.328 1.478 1.001

#> d.1[4,6] 1.549 0.189 1.216 1.417 1.531 1.674 1.955 1.006

#> d.1[5,6] 1.871 0.276 1.394 1.673 1.848 2.051 2.456 1.003

#> d.1[6,6] 2.903 0.601 1.847 2.461 2.864 3.298 4.202 1.001

#> d.1[1,7] 0.000 0.000 0.000 0.000 0.000 0.000 0.000 1.000

#> d.1[2,7] 0.555 0.203 0.174 0.416 0.554 0.689 0.967 1.006

#> d.1[3,7] 1.006 0.304 0.466 0.786 0.991 1.198 1.658 1.001

#> d.1[1,8] 0.000 0.000 0.000 0.000 0.000 0.000 0.000 1.000

#> d.1[2,8] 0.505 0.321 0.026 0.244 0.468 0.724 1.188 1.002

#> d.1[3,8] 1.273 0.160 0.956 1.165 1.275 1.386 1.574 1.003

#> d.1[4,8] 1.616 0.104 1.423 1.543 1.613 1.684 1.831 1.003

#> sd 0.260 0.046 0.172 0.228 0.259 0.290 0.352 1.004

#> totresdev 189.510 19.088 153.198 176.128 188.700 202.161 228.771 1.001

#> deviance 1092.616 19.088 1056.304 1079.234 1091.806 1105.267 1131.877 1.001

#> n.eff

#> d.1[1,1] 1

#> d.1[1,2] 1

#> d.1[2,2] 3000

#> d.1[3,2] 3000

#> d.1[4,2] 3000

#> d.1[1,3] 1

#> d.1[2,3] 1300

#> d.1[3,3] 3000

#> d.1[4,3] 1300

#> d.1[5,3] 1100

#> d.1[1,4] 1

#> d.1[2,4] 350

#> d.1[3,4] 370

#> d.1[1,5] 1

#> d.1[2,5] 3000

#> d.1[3,5] 3000

#> d.1[4,5] 3000

#> d.1[1,6] 1

#> d.1[2,6] 3000

#> d.1[3,6] 2700

#> d.1[4,6] 400

#> d.1[5,6] 820

#> d.1[6,6] 2300

#> d.1[1,7] 1

#> d.1[2,7] 2100

#> d.1[3,7] 3000

#> d.1[1,8] 1

#> d.1[2,8] 3000

#> d.1[3,8] 840

#> d.1[4,8] 780

#> sd 1500

#> totresdev 3000

#> deviance 3000

#>

#> For each parameter, n.eff is a crude measure of effective sample size,

#> and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

#>

#> DIC info (using the rule, pD = var(deviance)/2)

#> pD = 126.9 and DIC = 1218.7

#> DIC is an estimate of expected predictive error (lower deviance is better).In the output from non-parametric models, d.1 parameters

represent the relative effect for each treatment (specific dose of a

specific agent) versus the reference treatment, similar to in a standard

Network Meta-Analysis. The first index of d represents the

dose identifier, and the second index represents the agent identifier.

Information on the specific values of the doses is not included in the

model, as only the ordering of them (lowest to highest) is

important.

Note that some post-estimation functions (e.g. ranking, prediction) cannot be performed on non-parametric models within the package.

Post-Estimation

For looking at post-estimation in MBNMA we will demonstrate using results from an Emax MBNMA on the triptans dataset unless specified otherwise:

tripnet <- mbnma.network(triptans)

#> Values for `agent` with dose = 0 have been recoded to `Placebo`

#> agent is being recoded to enforce sequential numbering and allow inclusion of `Placebo`

trip.emax <- mbnma.run(tripnet, fun=demax(emax="rel", ed50="rel"))

#> 'ed50' parameters are on exponential scale to ensure they take positive values on the natural scale

#> `likelihood` not given by user - set to `binomial` based on data provided

#> `link` not given by user - set to `logit` based on assigned value for `likelihood`Deviance plots

To assess how well a model fits the data, it can be useful to look at

a plot of the contributions of each data point to the residual deviance.

This can be done using devplot(). As individual deviance

contributions are not automatically monitored in

parameters.to.save, this might require the model to be

automatically run for additional iterations.

Results can be plotted either as a scatter plot

(plot.type="scatter") or a series of boxplots

(plot.type="box").

# Plot boxplots of residual deviance contributions (scatterplot is the default)

devplot(trip.emax, plot.type = "box")

#> `resdev` not monitored in mbnma$parameters.to.save.

#> additional iterations will be run in order to obtain results for `resdev`

From these plots we can see that whilst the model fit does not seem to be systematically non-linear (which would suggest an alternative dose-response function may be a better fit), residual deviance is high at a dose of 1 for eletriptan, and at 2 for sumatriptan. This may indicate that fitting random effects may allow for additional variability in response which may improve the model fit.

If saved to an object, the output of devplot() contains

the results for individual deviance contributions, and this can be used

to identify any extreme outliers.

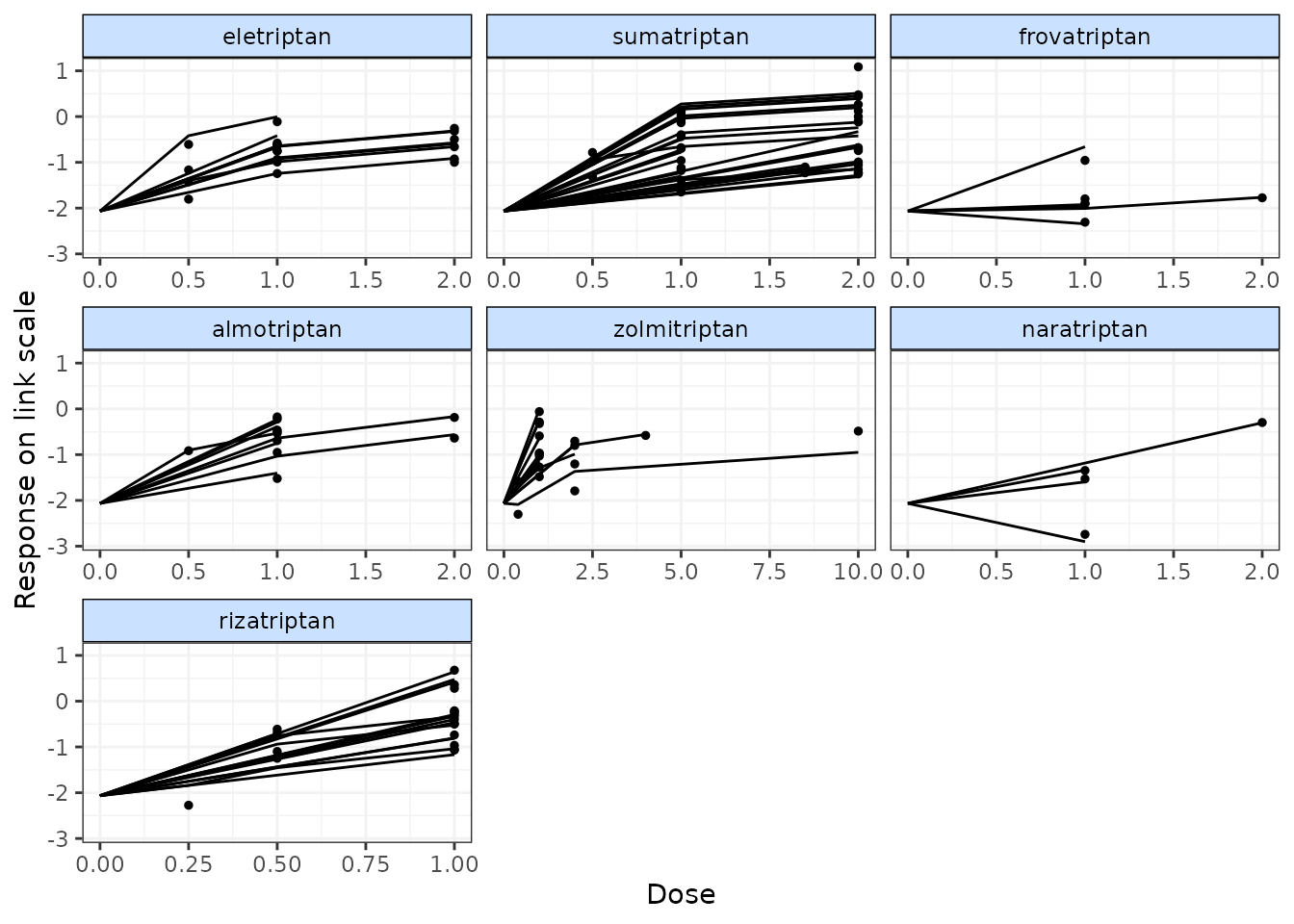

Fitted values

Another approach for assessing model fit can be to plot the fitted

values, using fitplot(). As with devplot(),

this may require running additional model iterations to monitor

theta.

# Plot fitted and observed values with treatment labels

fitplot(trip.emax)

#> `theta` not monitored in mbnma$parameters.to.save.

#> additional iterations will be run in order to obtain results

Fitted values are plotted as connecting lines and observed values in the original dataset are plotted as points. These plots can be used to identify if the model fits the data well for different agents and at different doses along the dose-response function.

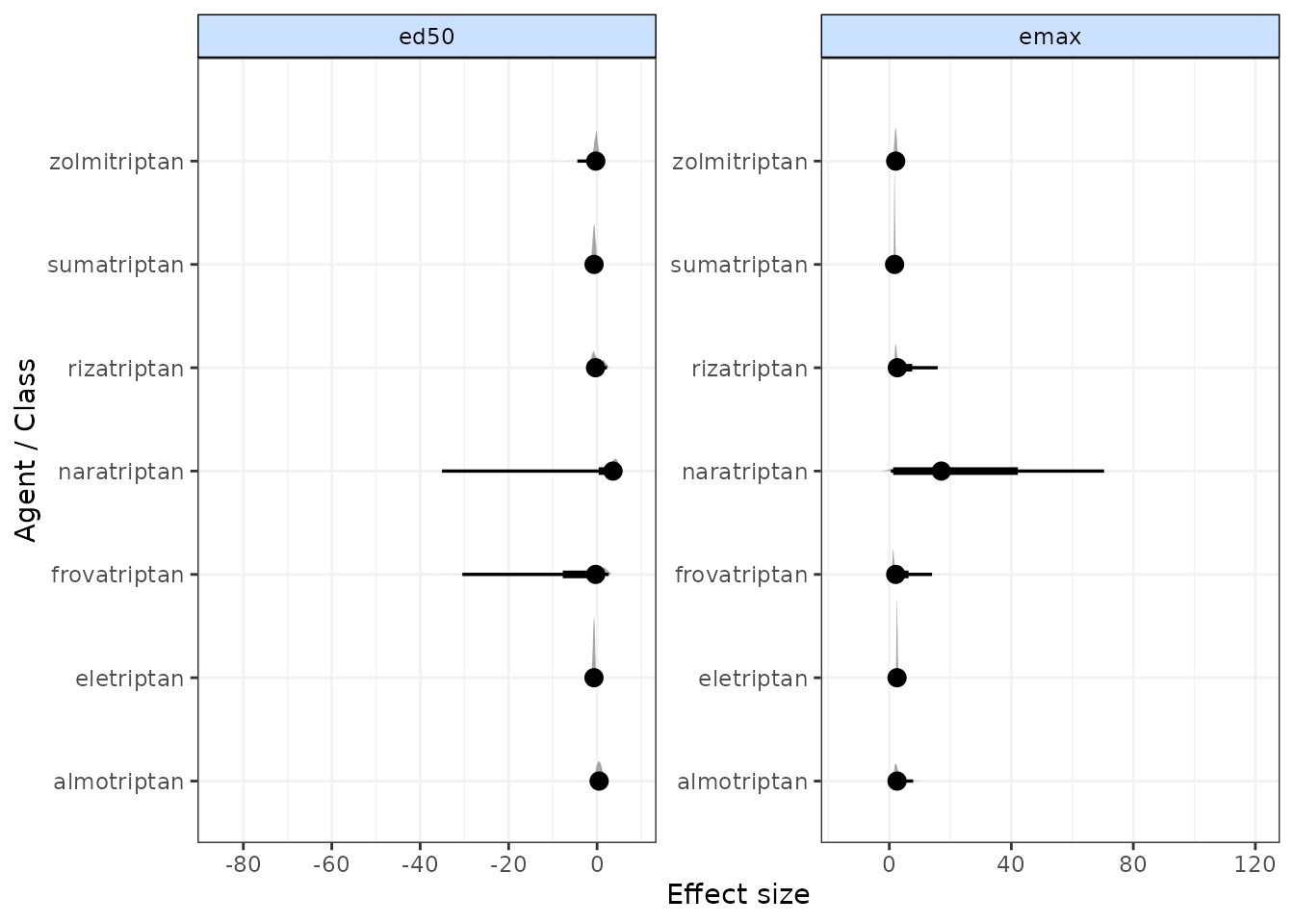

Forest plots

Forest plots can be easily generated from MBNMA models using the

plot() method on an "mbnma" object. By default

this will plot a separate panel for each dose-response parameter in the

model. Forest plots can only be generated for parameters which are

modelled using relative effects and that vary by agent/class.

plot(trip.emax)

Estimating relative effects

It may be of interest to calculate relative effects between different doses of agents to obtain outputs that are more similar to those from standard NMA. Relative effects can take the form of odds ratios, mean differences or rate ratios, depending on the likelihood and link function used in a model. Estimating relative effects can be particularly helpful for dose-response functions where parameter interpretation can be challenging (e.g. splines, fractional polynomials).

The get.relative() function allows for calculation of

relative effects between any doses of agents as specified by the user.

This includes doses not available in the original dataset, as these can

be estimated via the dose-response relationship. Optional arguments

allow for calculation of 95% prediction intervals rather than 95%

credible intervals (the default), and for the conversion of results from

the log to the natural scale. The resulting relative effects can also be

ranked (see Ranking for more details).

# Specify treatments (agents and doses) for which to estimate relative effects

treats <- list("Placebo"=0,

"eletriptan"= 1,

"sumatriptan"=2,

"almotriptan"=1)

# Print relative effects on the natural scale

rels <- get.relative(trip.emax, treatments = treats, eform=TRUE)

print(rels)

#> ============================================================

#> Relative treatment comparisons (95% credible intervals)

#> ============================================================

#>

#> Placebo_0 5.5 (4.8, 6.3) 4 (3.6, 4.4) 2.7 (2.3, 3.1)

#> 5.5 (4.8, 6.3) eletriptan_1 0.73 (0.63, 0.84) 0.49 (0.4, 0.59)

#> 4 (3.6, 4.4) 0.73 (0.63, 0.84) sumatriptan_2 0.67 (0.57, 0.78)

#> 2.7 (2.3, 3.1) 0.49 (0.4, 0.59) 0.67 (0.57, 0.78) almotriptan_1

# Rank relative effects

rank(rels)

#>

#> ================================

#> Ranking of dose-response MBNMA

#> ================================

#>

#> Includes ranking of relative effects between treatments from dose-response MBNMA

#>

#> 4 agents/classes/predictions ranked with negative responses being `worse`

#>

#> Relative Effects ranking (from best to worst)

#>

#> |Treatment | Mean| Median| 2.5%| 97.5%|

#> |:-------------|----:|------:|----:|-----:|

#> |eletriptan_1 | 1| 1| 1| 1|

#> |sumatriptan_2 | 2| 2| 2| 2|

#> |almotriptan_1 | 3| 3| 3| 3|

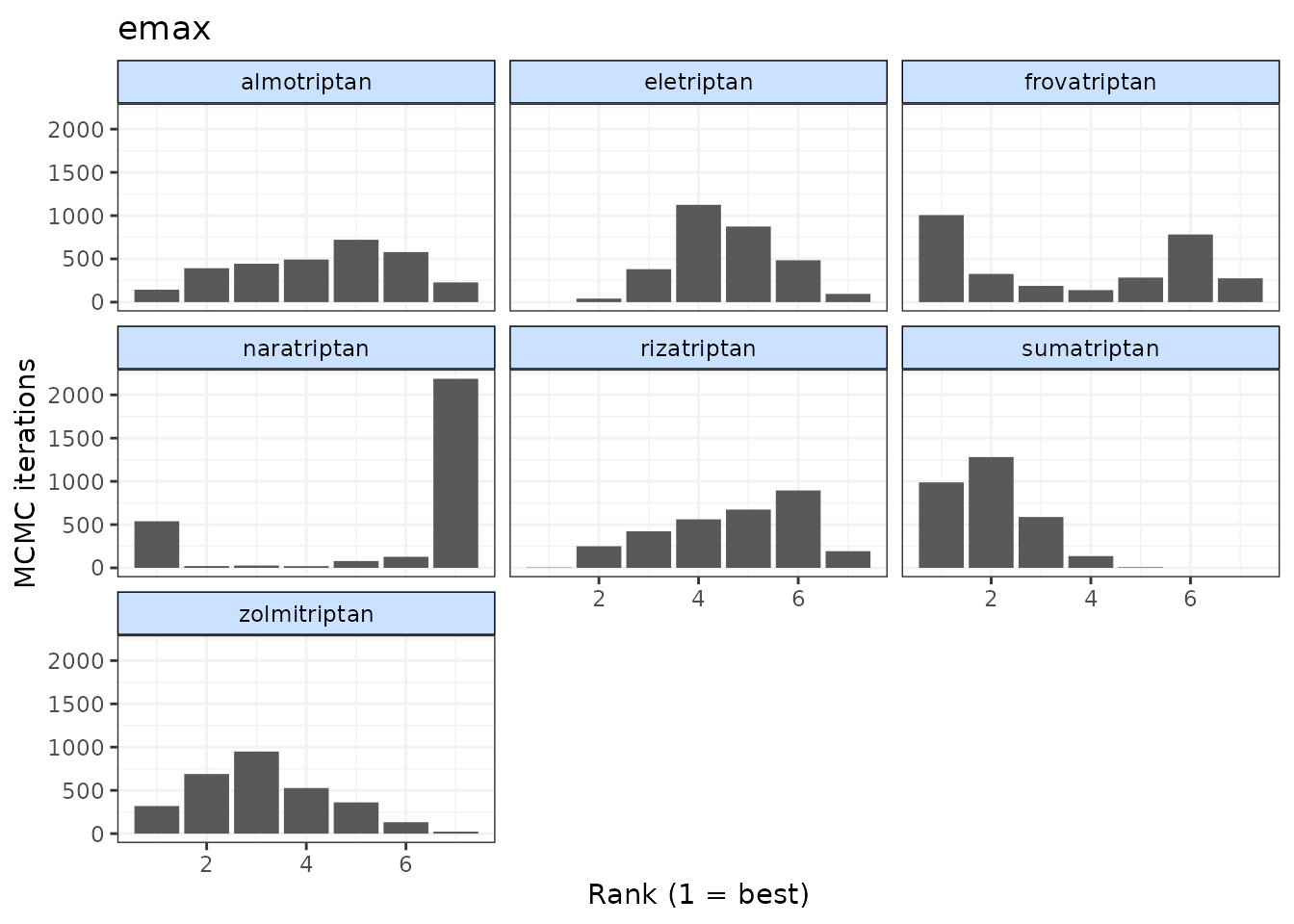

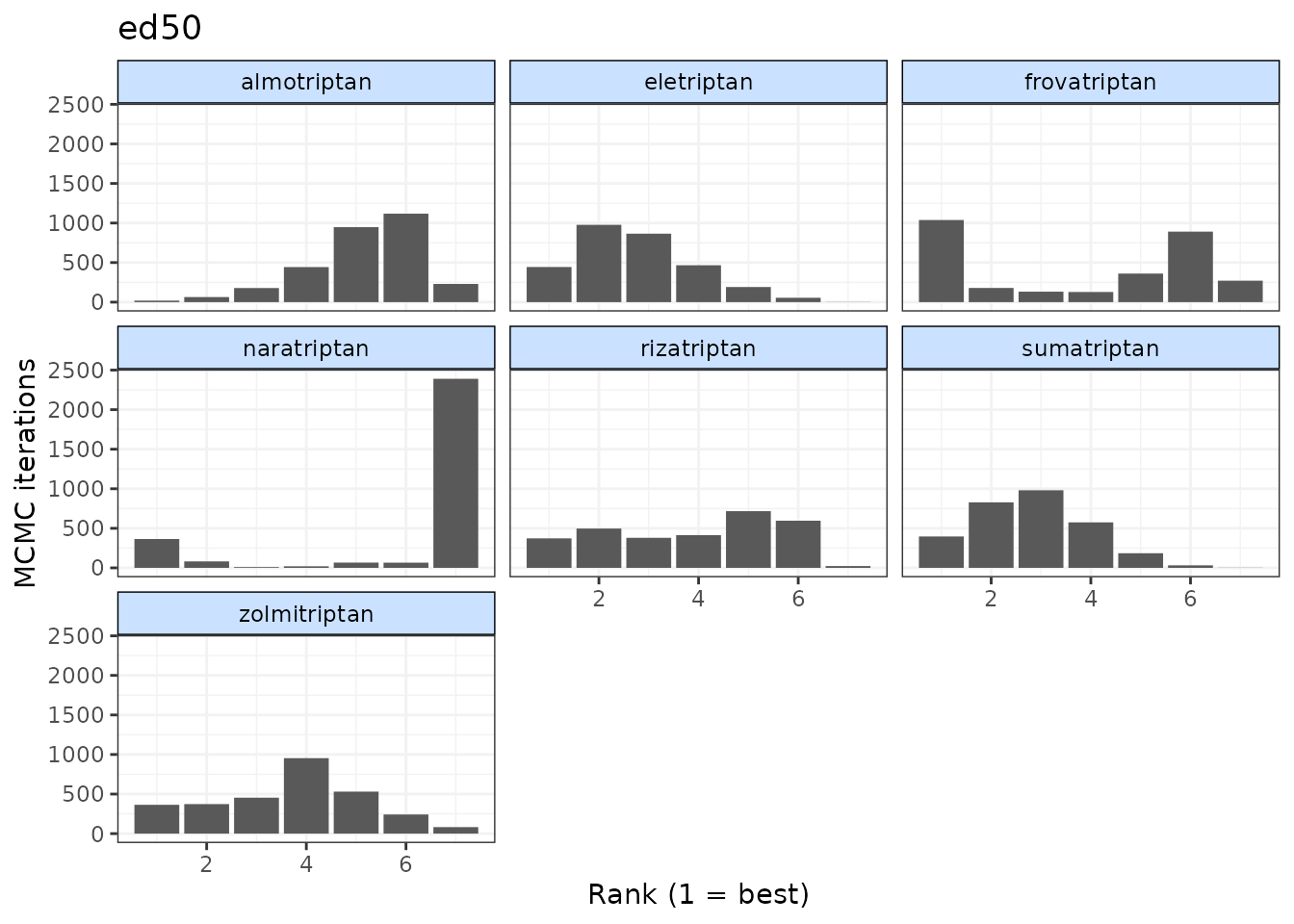

#> |Placebo_0 | 4| 4| 4| 4|Ranking

Rankings can be calculated for different dose-response parameters

from MBNMA models by using rank() on an

"mbnma" object. Any parameter monitored in an MBNMA model

that varies by agent/class can be ranked. A vector of these is assigned

to params. lower_better indicates whether

negative responses should be ranked as “better” (TRUE) or

“worse” (FALSE).

ranks <- rank(trip.emax, lower_better = FALSE)

print(ranks)

#>

#> ================================

#> Ranking of dose-response MBNMA

#> ================================

#>

#> Includes ranking of relative effects from dose-response MBNMA:

#> emax ed50

#>

#> 7 agents/classes/predictions ranked with positive responses being `better`

#>

#> emax ranking (from best to worst)

#>

#> |Treatment | Mean| Median| 2.5%| 97.5%|

#> |:------------|----:|------:|----:|-----:|

#> |sumatriptan | 1.96| 2| 1| 4|

#> |zolmitriptan | 3.14| 3| 1| 6|

#> |frovatriptan | 3.60| 3| 1| 7|

#> |almotriptan | 4.30| 5| 1| 7|

#> |eletriptan | 4.55| 4| 3| 7|

#> |rizatriptan | 4.70| 5| 2| 7|

#> |naratriptan | 5.74| 7| 1| 7|

#>

#>

#> ed50 ranking (from best to worst)

#>

#> |Treatment | Mean| Median| 2.5%| 97.5%|

#> |:------------|----:|------:|----:|-----:|

#> |eletriptan | 2.72| 3| 1| 5|

#> |sumatriptan | 2.81| 3| 1| 5|

#> |zolmitriptan | 3.66| 4| 1| 7|

#> |frovatriptan | 3.78| 5| 1| 7|

#> |rizatriptan | 3.83| 4| 1| 6|

#> |almotriptan | 5.17| 5| 2| 7|

#> |naratriptan | 6.03| 7| 1| 7|

summary(ranks)

#> $emax

#> rank.param mean sd 2.5% 25% 50% 75% 97.5%

#> 1 eletriptan 4.554667 1.0469210 3 4 4 5 7

#> 2 sumatriptan 1.964333 0.8524299 1 1 2 2 4

#> 3 frovatriptan 3.605000 2.3072529 1 1 3 6 7

#> 4 almotriptan 4.301000 1.6459958 1 3 5 6 7

#> 5 zolmitriptan 3.136667 1.3463258 1 2 3 4 6

#> 6 naratriptan 5.735667 2.3234157 1 6 7 7 7

#> 7 rizatriptan 4.702667 1.4107644 2 4 5 6 7

#>

#> $ed50

#> rank.param mean sd 2.5% 25% 50% 75% 97.5%

#> 1 eletriptan 2.718667 1.193591 1 2 3 3 5

#> 2 sumatriptan 2.809000 1.146425 1 2 3 4 5

#> 3 frovatriptan 3.784000 2.339480 1 1 5 6 7

#> 4 almotriptan 5.170333 1.152864 2 5 5 6 7

#> 5 zolmitriptan 3.656333 1.539161 1 3 4 5 7

#> 6 naratriptan 6.033333 2.086865 1 7 7 7 7

#> 7 rizatriptan 3.828333 1.726999 1 2 4 5 6The output is an object of class("mbnma.rank"),

containing a list for each ranked parameter in params,

which consists of a summary table of rankings and raw information on

agent/class (depending on argument given to level) ranking

and probabilities. The summary median ranks with 95% credible intervals

can be simply displayed using summary().

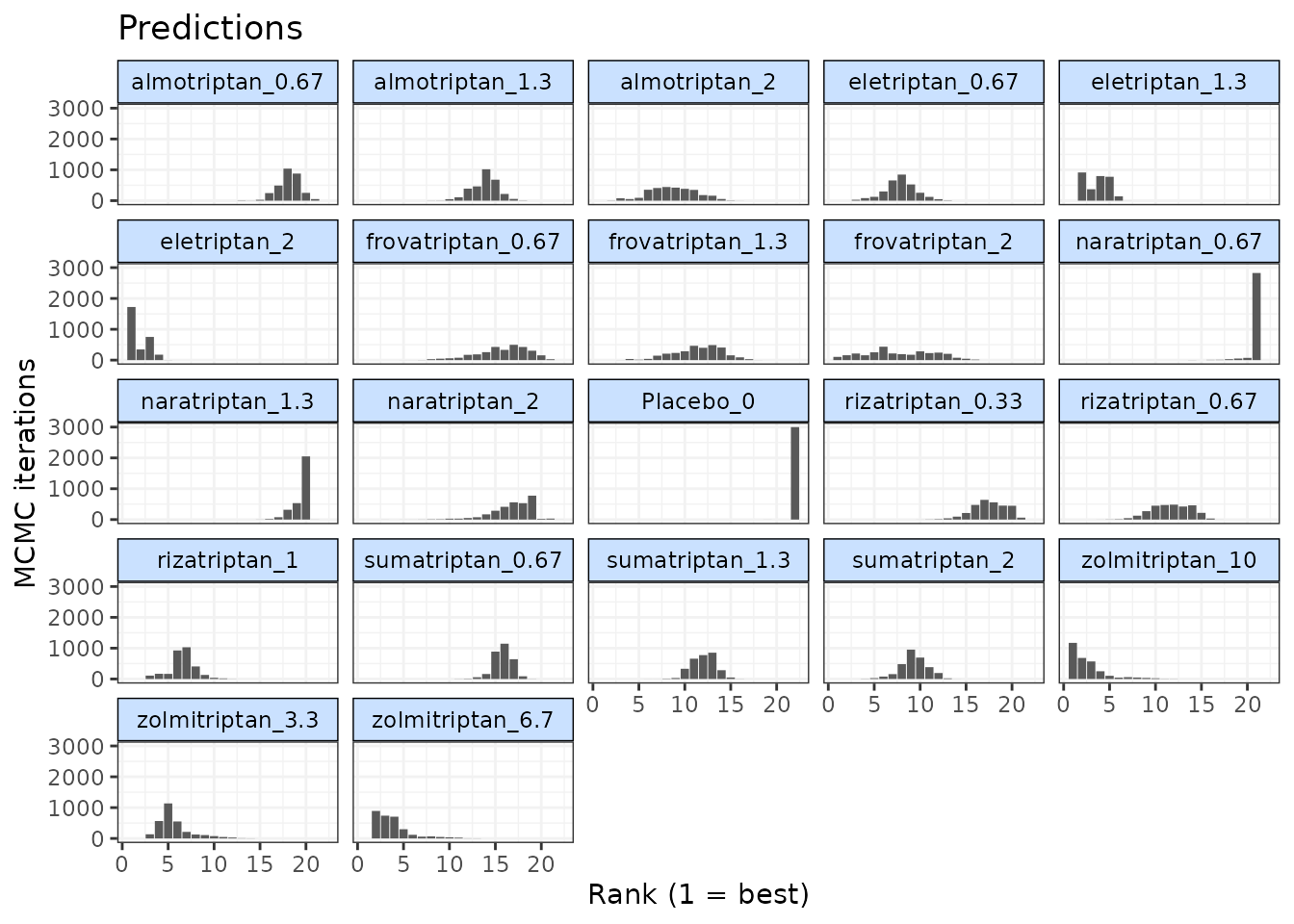

Histograms for ranking results can also be plotted using the

plot() method, which takes the raw MCMC ranking results

stored in mbnma.rank and plots the number of MCMC

iterations the parameter value for each treatment was ranked a

particular position.

# Ranking histograms for Emax

plot(ranks, params = "emax")

# Ranking histograms for ED50

plot(ranks, params = "ed50")

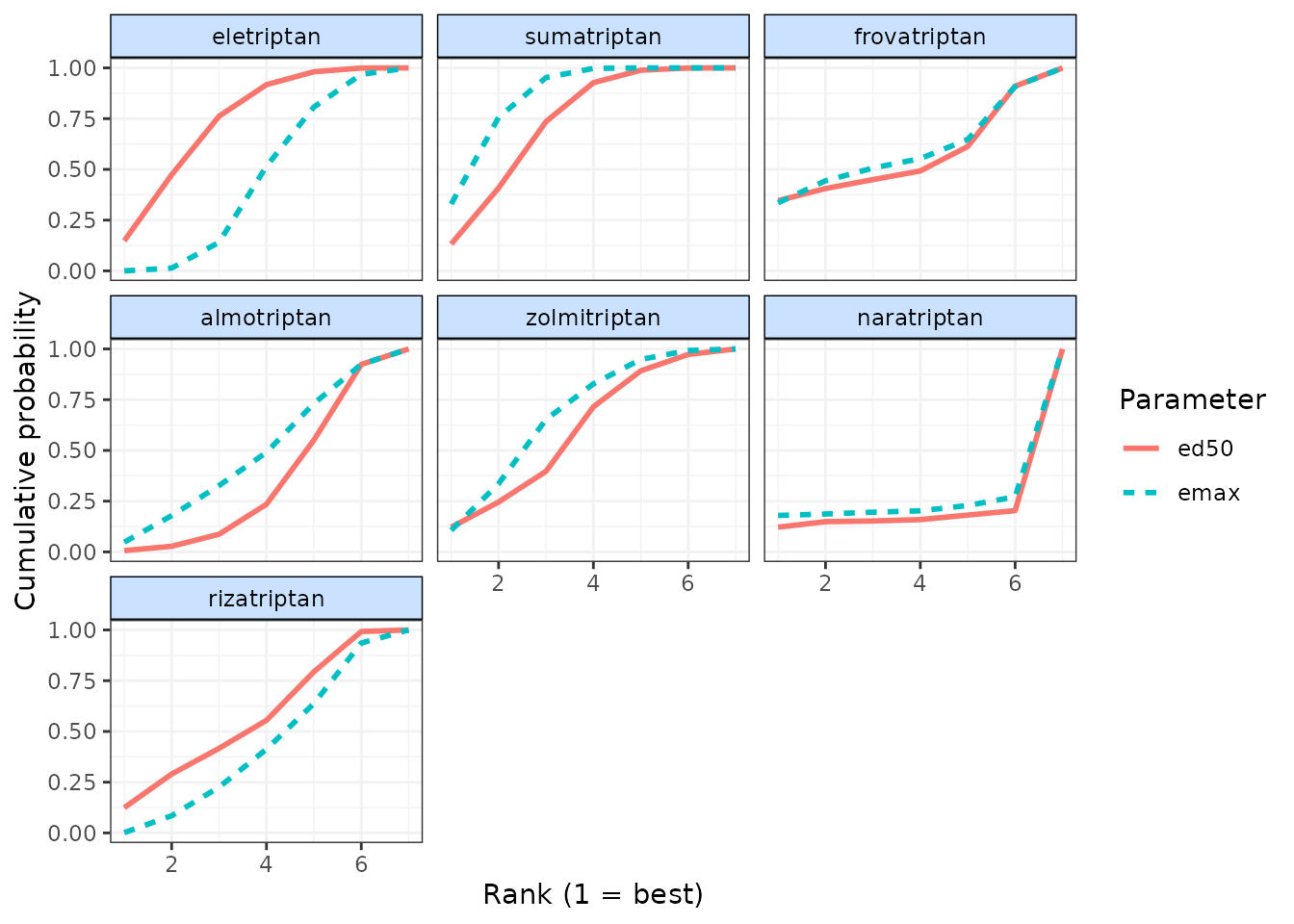

Alternatively, cumulative ranking plots for all parameters can be

plotted simultaneously so as to be able to compare the effectiveness of

different agents on different parameters. The surface under cumulative

ranking curve (SUCRA) for each parameter can also be estimated by

setting sucra=TRUE.

# Cumulative ranking plot for both dose-response parameters

cumrank(ranks, sucra=TRUE)

#> # A tibble: 14 × 3

#> agent parameter sucra

#> <fct> <chr> <dbl>

#> 1 eletriptan ed50 4.71

#> 2 eletriptan emax 2.95

#> 3 sumatriptan ed50 4.62

#> 4 sumatriptan emax 5.37

#> 5 frovatriptan ed50 3.54

#> 6 frovatriptan emax 3.73

#> 7 almotriptan ed50 2.33

#> 8 almotriptan emax 3.18

#> 9 zolmitriptan ed50 3.78

#> 10 zolmitriptan emax 4.31

#> 11 naratriptan ed50 1.41

#> 12 naratriptan emax 1.67

#> 13 rizatriptan ed50 3.61

#> 14 rizatriptan emax 2.80Prediction

After performing an MBNMA, responses can be predicted from the model

parameter estimates using predict() on an

"mbnma" object. A number of important arguments should be

specified for prediction. See ?predict.mbnma for detailed

specification of these arguments.

E0 This is the response at dose = 0 (equivalent to the

placebo response). Since relative effects are the parameters estimated

in MBNMA, the placebo response is not explicitly modelled and therefore

must be provided by the user in some way. The simplest approach is to

provide either a single numeric value for E0 (deterministic

approach), or a string representing a distribution for E0

that can take any Random Number Generator (RNG) distribution for which a

function exists in R (stochastic approach). Values should be given on

the natural scale. For example for a binomial outcome:

- Deterministic:

E0 <- 0.2 - Stochastic:

E0 <- "rbeta(n, shape1=2, shape2=10)"

Another approach is to estimate E0 from a set of

studies. These would ideally be studies of untreated/placebo-treated

patients that closely resemble the population for which predictions are

desired, and the studies may be observational. However, synthesising

results from the placebo arms of trials in the original network is also

possible. For this, E0 is assigned a data frame of studies

in the long format (one row per study arm) with the variables

studyID, and a selection of y,

se, r, n and E

(depending on the likelihood used in the MBNMA model).

synth can be set to "fixed" or

"random" to indicate whether this synthesis should be fixed

or random effects.

E0 <- triptans[triptans$dose==0,]Additionally, it’s also necessary to specify the doses at which to

predict responses. By default, predict() uses the maximum

dose within the dataset for each agent, and predicts doses at a series

of cut points. The number of cut points can be specified using

n.doses, and the maximum dose to use for prediction for

each agent can also be specified using max.doses (a named

list of numeric values where element names correspond to agent

names).

An alternative approach is to predict responses at specific doses for

specific agents using the argument exact.doses. As with

max.doses, this is a named list in which element names

correspond to agent names, but each element is a numeric vector in which

each value within the vector is a dose at which to predict a response

for the given agent.

# Predict 20 doses for each agent, with a stochastic distribution for E0

doses <- list("Placebo"=0,

"eletriptan"=3,

"sumatriptan"=3,

"almotriptan"=3,

"zolmitriptan"=3,

"naratriptan"=3,

"rizatriptan"=3)

pred <- predict(trip.emax, E0="rbeta(n, shape1=2, shape2=10)",

max.doses=doses, n.dose=20)

# Predict exact doses for two agents, and estimate E0 from the data

E0.data <- triptans[triptans$dose==0,]

doses <- list("eletriptan"=c(0,1,3),

"sumatriptan"=c(0,3))

pred <- predict(trip.emax, E0=E0.data,

exact.doses=doses)

#> Values for `agent` with dose = 0 have been recoded to `Placebo`

#> agent is being recoded to enforce sequential numbering and allow inclusion of `Placebo`An object of class "mbnma.predict" is returned, which is

a list of summary tables and MCMC prediction matrices for each treatment

(combination of dose and agent). The summary() method can

be used to print mean posterior predictions at each time point for each

treatment.

summary(pred)

#> agent dose mean sd 2.5% 25% 50%

#> 1 Placebo 0 0.1238613 0.003372775 0.1174496 0.1214747 0.1238825

#> 2 eletriptan 0 0.1238613 0.003372775 0.1174496 0.1214747 0.1238825

#> 3 eletriptan 1 0.4359085 0.018714169 0.3992969 0.4236221 0.4359272

#> 4 eletriptan 3 0.5558782 0.027120490 0.5026114 0.5378783 0.5557909

#> 5 sumatriptan 0 0.1238613 0.003372775 0.1174496 0.1214747 0.1238825

#> 6 sumatriptan 3 0.3850457 0.018546501 0.3500641 0.3727659 0.3844962

#> 75% 97.5%

#> 1 0.1260667 0.1305596

#> 2 0.1260667 0.1305596

#> 3 0.4482241 0.4728438

#> 4 0.5737819 0.6091708

#> 5 0.1260667 0.1305596

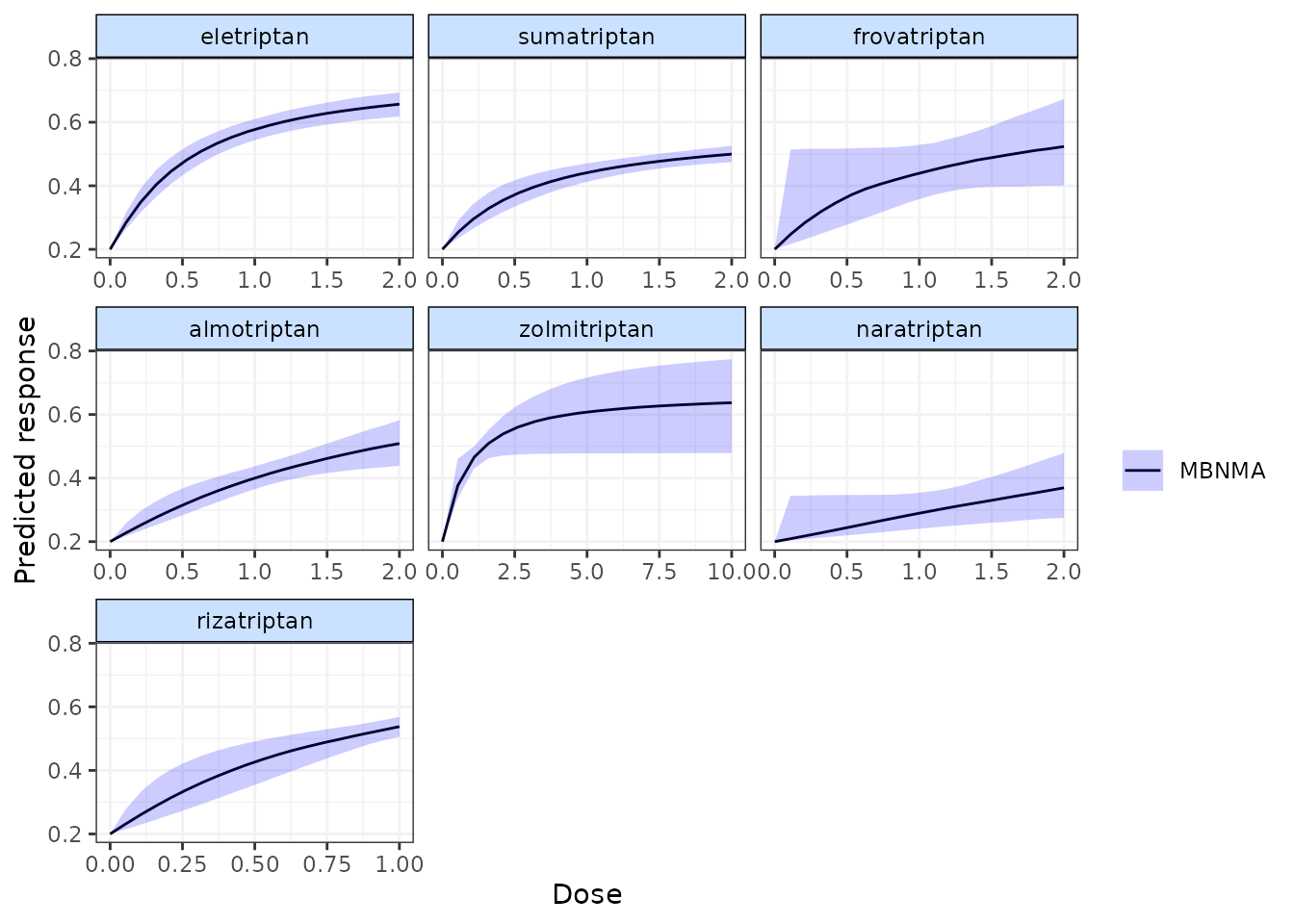

#> 6 0.3969540 0.4226329Plotting predicted responses

Predicted responses can also be plotted using the plot()

method on an object of class("mbnma.predict"). The

predicted responses are joined by a line to form the dose-response curve

for each agent predicted, with 95% credible intervals (CrI). Therefore,

when plotting the response it is important to predict a sufficient

number of doses (using n.doses) to get a smooth curve.

# Predict responses using default doses up to the maximum of each agent in the dataset

pred <- predict(trip.emax, E0=0.2, n.dose=20)

plot(pred)

Shaded counts of the number of studies in the original dataset that

investigate each dose of an agent can be plotted over the 95% CrI for

each treatment by setting disp.obs = TRUE, though this

requires that the original "mbnma.network" object used to

estimate the MBNMA be provided via network.

plot(pred, disp.obs = TRUE)

#> 66 placebo arms in the dataset are not shown within the plots

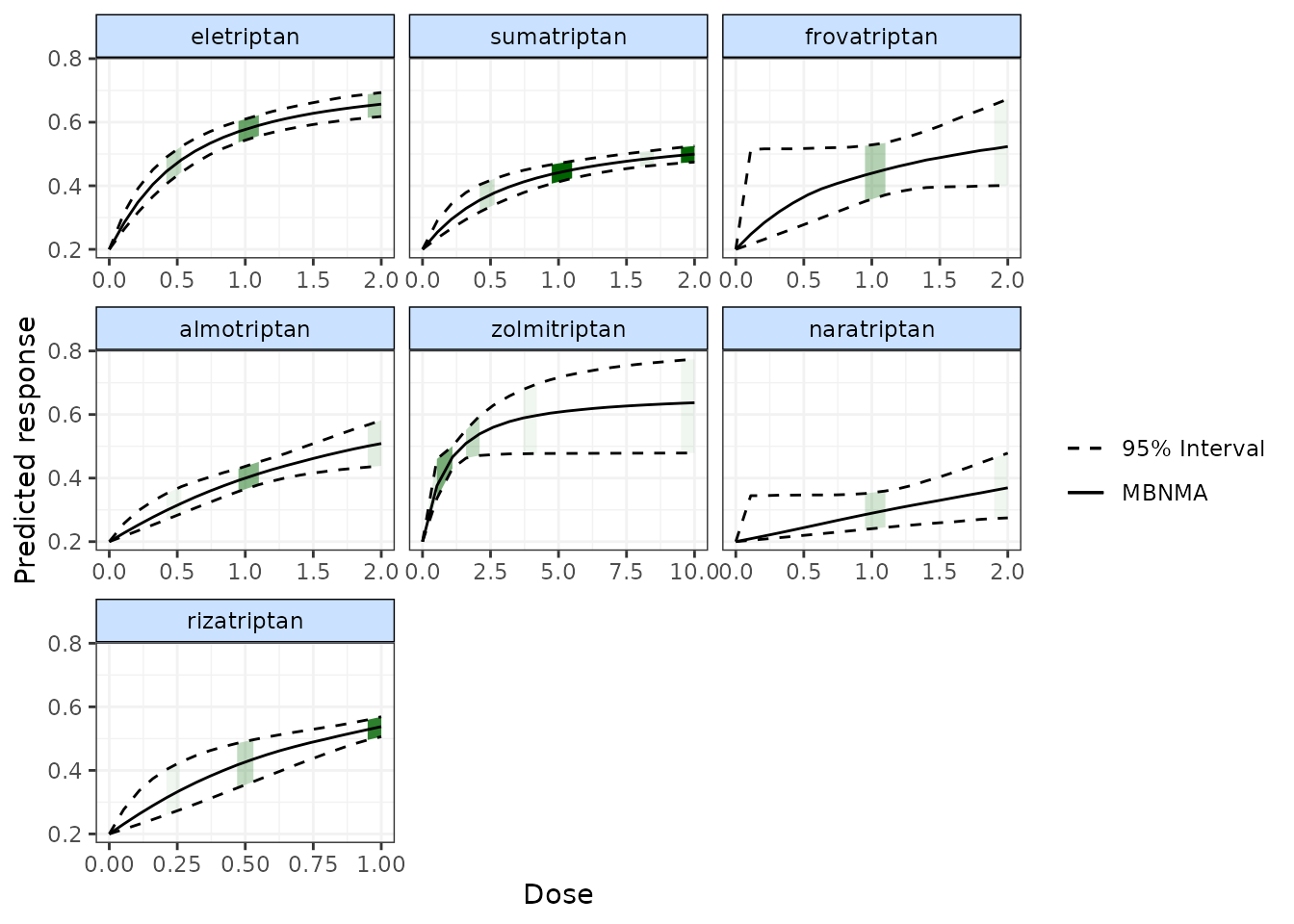

This can be used to identify any extrapolation/interpretation of the dose-response that might be occurring for a particular agent. As you can see, more observations typically leads to tighter 95% CrI for the predicted response at a particular point along the dose-response curve.

We can also plot the results of a “split” Network Meta-Analysis (NMA)

in which all doses of an agent are assumed to be independent. As with

disp.obs we also need to provide the original

mbnma.network object to be able to estimate this, and we

can also specify if we want to perform a common or random effects NMA

using method. Treatments that are only connected to the

network via the dose-response relationship (rather than by a direct

head-to-head comparison) will not be included.

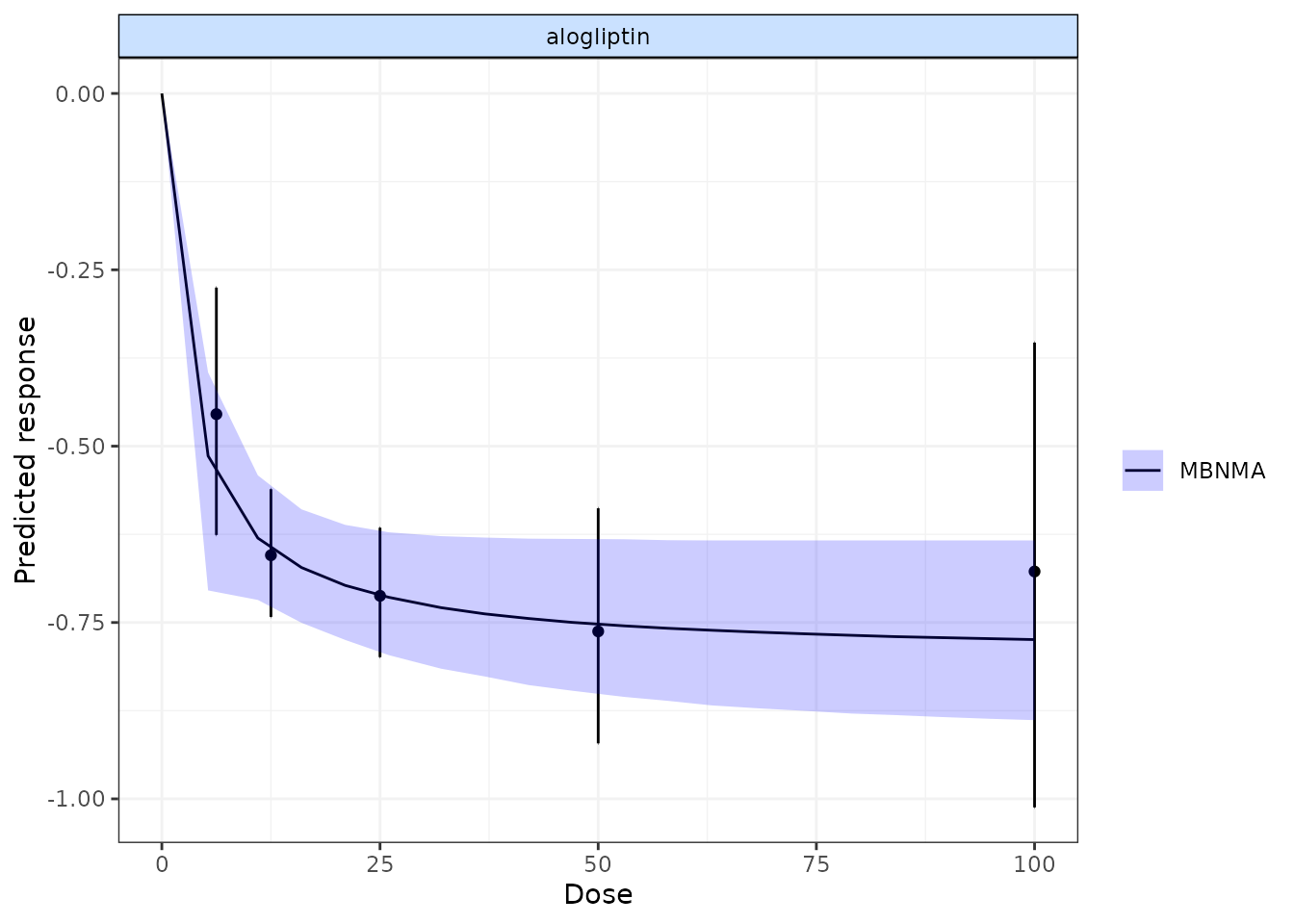

alognet <- mbnma.network(alog_pcfb)

alog.emax <- mbnma.run(alognet, fun=demax(), method="random")

pred <- predict(alog.emax, E0=0, n.dose=20)

plot(pred, overlay.split = TRUE, method="random")